Difference between revisions of "The individual approach"


| Line 1: | Line 1: | ||


| + | == Overview == | ||

| − | + | Before we start looking at modeling a whole population at the same time, we are going to consider only one individual from that population. Much of the basic methodology for modeling one individual follows through to population modeling. We will see that when stepping up from one individual to a population, the difference is that some parameters shared by individuals are considered to be drawn from a [http://en.wikipedia.org/wiki/Probability_distribution probability distribution]. | |

| + | Let us begin with a simple example. | ||

| + | An individual receives 100mg of a drug at time $t=0$. At that time and then every hour for fifteen hours, the | ||

| + | concentration of a marker in the bloodstream is measured and plotted against time: | ||

| − | + | ::[[File:New_Individual1.png|link=]] | |

| − | + | We aim to find a mathematical model to describe what we see in the figure. The eventual goal is then to extend this approach to the ''simultaneous modeling'' of a whole population. | |


| − | + | <br> | |


| + | == Model and methods for the individual approach == | ||

| + | <br> | ||

| + | ===Defining a model=== | ||

| − | + | In our example, the concentration is a ''continuous'' variable, so we will try to use continuous functions to model it. | |

| + | Different types of data (e.g., [http://en.wikipedia.org/wiki/Count_data count data], [http://en.wikipedia.org/wiki/Categorical_data categorical data], [http://en.wikipedia.org/wiki/Survival_analysis time-to-event data], etc.) require different types of models. All of these data types will be considered in due time, but for now let us concentrate on a continuous data model. | ||

| + | A model for continuous data can be represented mathematically as follows: | ||

| − | { | + | {{Equation1 |

| − | | | + | |equation=<math> |

| − | + | y_{j} = f(t_j ; \psi) + e_j, \quad \quad 1\leq j \leq n, </math> }} | |


| + | where: | ||

| − | + | * $f$ is called the ''structural model''. It corresponds to the basic type of curve we suspect the data is following, e.g., linear, logarithmic, exponential, etc. Sometimes, a model of the associated biological processes leads to equations that define the curve's shape. | |

| + | * $(t_1,t_2,\ldots , t_n)$ is the vector of observation times. Here, $t_1 = 0$ hours and $t_n = t_{16} = 15$ hours. | ||

| − | * | + | * $\psi=(\psi_1, \psi_2, \ldots, \psi_d)$ is a vector of $d$ parameters that influences the value of $f$. |


| + | * $(e_1, e_2, \ldots, e_n)$ are called the ''residual errors''. Usually, we suppose that they come from some centered probability distribution: $\esp{e_j} =0$. | ||

| − | |||

| − | + | In fact, we usually state a continuous data model in a slightly more flexible way: | |

| − | + | {{EquationWithRef | |

| − | + | |equation=<div id="cont"><math> | |

| − | + | y_{j} = f(t_j ; \psi) + g(t_j ; \psi)\teps_j , \quad \quad 1\leq j \leq n, | |

</math></div> | </math></div> | ||

| + | |reference=(1) }} | ||

| + | where now: | ||

| − | |||

| + | <ul> | ||

| + | * $g$ is called the ''residual error model''. It may be a function of the time $t_j$ and parameters $\psi$. | ||

| − | : | + | * $(\teps_1, \teps_2, \ldots, \teps_n)$ are the ''normalized'' residual errors. We suppose that these come from a probability distribution which is centered and has unit variance: $\esp{\teps_j} = 0$ and $\var{\teps_j} =1$. |

| − | + | </ul> | |

| − | </ | ||

| + | <br> | ||

| − | + | ===Choosing a residual error model=== | |

| − | + | The choice of a residual error model $g$ is very flexible, and allows us to account for many different hypotheses we may have on the error's distribution. Let $f_j=f(t_j;\psi)$. Here are some simple error models. | |


| + | <ul> | ||

| + | * ''Constant error model'': $g=a$. That is, $y_j=f_j+a\teps_j$. | ||

| − | |||

| − | : | + | * ''Proportional error model'': $g=b\,f$. That is, $y_j=f_j+bf_j\teps_j$. This is appropriate when we think the magnitude of the error is proportional to the predicted value $f$. |

| − | |||

| − | |||

| − | + | * ''Combined error model'': $g=a+b f$. Here, $y_j=f_j+(a+bf_j)\teps_j$. | |

| − | : | + | * ''Alternative combined error model'': $g^2=a^2+b^2f^2$. Here, $y_j=f_j+\sqrt{a^2+b^2f_j^2}\teps_j$. |

| − | |||

| − | |||

| − | + | * ''Exponential error model'': here, the model is instead $\log(y_j)=\log(f_j) + a\teps_j$, that is, $g=a$. It is exponential in the sense that if we exponentiate, we end up with $y_j = f_j e^{a\teps_j}$. | |

| + | </ul> | ||
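As an illustration, the error models listed above can be written as small {{Verbatim|R}} functions of the prediction $f$. This is only a sketch: the function names and the numerical values are hypothetical and chosen purely for illustration.

```r
# Residual error models g, written as functions of the prediction f.
# Function names and parameter values are illustrative assumptions.
g_constant     <- function(f, a)    rep(a, length(f))
g_proportional <- function(f, b)    b * f
g_combined1    <- function(f, a, b) a + b * f
g_combined2    <- function(f, a, b) sqrt(a^2 + b^2 * f^2)

# Simulate y_j = f_j + g_j * eps_j with standard normal eps_j
simulate_obs <- function(f, g) f + g * rnorm(length(f))

f <- c(1, 2, 4)                               # hypothetical predictions
sd_comb <- g_combined1(f, a = 0.5, b = 0.1)   # 0.6 0.7 0.9
set.seed(1)
y <- simulate_obs(f, sd_comb)

# Exponential error model: additive noise on the log scale
y_exp <- f * exp(0.2 * rnorm(length(f)))
```

Under the exponential error model the simulated values stay positive whenever $f$ is positive, which is one reason it is sometimes preferred for concentrations.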

| − | + | <br> | |

| − | + | ===Tasks=== | |

| + | To model a vector of observations $y = (y_j,\, 1\leq j \leq n)$ we must perform several tasks: | ||

| − | + | <ul> | |

| + | * Select a structural model $f$ and a residual error model $g$. | ||


| + | * Estimate the model's parameters $\psi$. | ||

| − | + | * ''Assess and validate'' the selected model. | |

| + | </ul> | ||


| − | + | <br> | |

| + | === Selecting structural and residual error models === | ||

| − | : | + | As we are interested in [http://en.wikipedia.org/wiki/Parametric_model parametric modeling], we must choose parametric structural and residual error models. In the absence of biological (or other) information, we might suggest possible structural models just by looking at plots of the data against time. For example, if $y_j$ is increasing with time, we might suggest an affine, quadratic or logarithmic model, depending on the approximate trend of the data. If $y_j$ is instead decreasing ever more slowly towards zero, an exponential model might be appropriate. |


| + | However, often we have biological (or other) information to help us make our choice. For instance, if we have a system of [http://en.wikipedia.org/wiki/Differential_equation differential equations] describing how the drug is eliminated from the body, its solution may provide the formula (i.e., structural model) we are looking for. | ||

| − | + | As for the residual error model, if it is not immediately obvious which one to choose, several can be tested in conjunction with one or several possible structural models. After parameter estimation, each structural and residual error model pair can be assessed, compared against the others, and/or validated in various ways. | |

| − | + | Now we can have a first look at parameter estimation, and further on, model assessment and validation. | |


| + | <br> | ||

| + | ===Parameter estimation=== | ||

| + | Given the observed data and the choice of a parametric model to describe it, our goal becomes to find the "best" parameters for the model. A traditional framework to solve this kind of problem is called [http://en.wikipedia.org/wiki/Maximum_likelihood maximum likelihood estimation] or MLE, in which the "most likely" parameters are found, given the data that was observed. | ||

| − | + | The likelihood $L$ is a function defined as: | |

| − | + | {{Equation1 | |

| − | + | |equation=<math> L(\psi ; y_1,y_2,\ldots,y_n) \ \ \eqdef \ \ \py( y_1,y_2,\ldots,y_n; \psi) , </math> }} | |

| − | : | + | i.e., the conditional [http://en.wikipedia.org/wiki/Joint_probability_distribution joint density function] of $(y_j)$ given the parameters $\psi$, but looked at as if the data are known and the parameters not. The $\hat{\psi}$ which maximizes $L$ is known as the ''maximum likelihood estimator''. |

| − | |||

| − | |||

| + | Suppose that we have chosen a structural model $f$ and residual error model $g$. If we assume for instance that $ \teps_j \sim_{i.i.d} {\cal N}(0,1)$, then the $y_j$ are independent of each other and [[#cont|(1)]] means that: | ||

| − | + | {{Equation1 | |

| + | |equation=<math> y_{j} \sim {\cal N}\left(f(t_j ; \psi) , g(t_j ; \psi)^2\right), \quad \quad 1\leq j \leq n .</math> }} | ||

| − | + | Due to this independence, the pdf of $y = (y_1, y_2, \ldots, y_n)$ is the product of the pdfs of each $y_j$: | |

| − | |||

| − | |||

| + | {{Equation1 | ||

| + | |equation=<math>\begin{eqnarray} | ||

| + | \py(y_1, y_2, \ldots y_n ; \psi) &=& \prod_{j=1}^n \pyj(y_j ; \psi) \\ \\ | ||

| + | & = & \frac{1}{\prod_{j=1}^n \sqrt{2\pi} g(t_j ; \psi)} \ {\rm exp}\left\{-\frac{1}{2} \sum_{j=1}^n \left( \displaystyle{ \frac{y_j - f(t_j ; \psi)}{g(t_j ; \psi)} }\right)^2\right\} . | ||

| + | \end{eqnarray}</math> }} | ||

| − | is the | + | This is the same thing as the likelihood function $L$ when seen as a function of $\psi$. Maximizing $L$ is equivalent to minimizing the deviance, i.e., -2 $\times$ the $\log$-likelihood ($LL$): |

| − | + | {{EquationWithRef | |

| − | + | |equation=<div id="LLL"><math>\begin{eqnarray} | |

| − | </math></div> | + | \hat{\psi} &=& \argmin{\psi} \left\{ -2 \,LL \right\}\\ |

| + | &=& \argmin{\psi} \left\{ | ||

| + | \sum_{j=1}^n \log\left(g(t_j ; \psi)^2\right) + \sum_{j=1}^n \left(\displaystyle{ \frac{y_j - f(t_j ; \psi)}{g(t_j ; \psi)} }\right)^2 \right\} . | ||

| + | \end{eqnarray}</math></div> | ||

| + | |reference=(2) }} | ||

| + | This minimization problem does not usually have an [http://en.wikipedia.org/wiki/Analytical_expression analytical solution] for nonlinear models, so an [http://en.wikipedia.org/wiki/Mathematical_optimization optimization] procedure needs to be used. | ||
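A minimal sketch of such a numerical minimization of criterion (2) is given here for a toy exponential-decay model with a constant error model. The data, the starting values and the names {{Verbatim|m2LL}} and {{Verbatim|psi_hat}} are assumptions made for illustration, and {{Verbatim|optim}} is used only as one possible general-purpose optimizer.

```r
# Numerical MLE by minimizing criterion (2) for a toy model
# f(t; phi) = A * exp(-k * t) with constant error g = a.
# Data, starting values and names are illustrative assumptions.
set.seed(7)
t <- seq(0, 10, by = 0.5)
y <- 4 * exp(-0.3 * t) + 0.1 * rnorm(length(t))

# psi = (A, k, log(a)); working on the log scale keeps a > 0 during the search
m2LL <- function(psi, y, t) {
  f <- psi[1] * exp(-psi[2] * t)
  g <- exp(psi[3])
  sum(((y - f) / g)^2 + log(g^2))
}

start <- c(3, 0.5, log(0.5))
fit <- optim(start, m2LL, y = y, t = t, control = list(maxit = 2000))
psi_hat <- c(A = fit$par[1], k = fit$par[2], a = exp(fit$par[3]))
```

Estimating $\log(a)$ rather than $a$ is a design choice of this sketch: it avoids having to constrain the optimizer to positive values of $a$.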

| + | However, for a few specific models, analytical solutions do exist. | ||

| + | For instance, suppose we have a constant error model: $y_{j} = f(t_j ; \psi) + a \, \teps_j,\,\, 1\leq j \leq n,$ that is: $g(t_j;\psi) = a$. In practice, $f$ is not itself a function of $a$, so we can write $\psi = (\phi,a)$ and therefore: $y_{j} = f(t_j ; \phi) + a \, \teps_j.$ Thus, [[#LLL|(2)]] simplifies to: | ||

| − | === | + | {{Equation1 |

| + | |equation=<math> (\hat{\phi},\hat{a}) \ \ = \ \ \argmin{(\phi,a)} \left\{ | ||

| + | n \log(a^2) + \sum_{j=1}^n \left(\displaystyle{ \frac{y_j - f(t_j ; \phi)}{a} }\right)^2 \right\} . | ||

| + | </math> }} | ||

| − | + | The solution is then: | |

| − | |||

| − | + | {{Equation1 | |

| + | |equation=<math>\begin{eqnarray} | ||

| + | \hat{\phi} &=& \argmin{\phi} \sum_{j=1}^n \left( y_j - f(t_j ; \phi)\right)^2 \\ | ||

| + | \hat{a}^2&=& \frac{1}{n}\sum_{j=1}^n \left( y_j - f(t_j ; \hat{\phi})\right)^2 , | ||

| + | \end{eqnarray} </math> }} | ||

| + | where $\hat{a}^2$ is found by setting the [http://en.wikipedia.org/wiki/Partial_derivative partial derivative] of $-2LL$ with respect to $a^2$ to zero. | ||

| − | + | Whether this has an analytical solution or not depends on the form of $f$. For example, if $f(t_j;\phi)$ is just a linear function of the components of the vector $\phi$, we can represent it as a matrix $F$ whose $j$th row gives the coefficients at time $t_j$. Therefore, we have the matrix equation $y = F \phi + a \teps$. | |

| − | + | The solution for $\hat{\phi}$ is thus the least-squares one, and for $\hat{a}^2$ it is the same as before: | |

| − | \ | ||

| − | |||

| + | {{Equation1 | ||

| + | |equation=<math>\begin{eqnarray} | ||

| + | \hat{\phi} &=& (F^\prime F)^{-1} F^\prime y \\ | ||

| + | \hat{a}^2&=& \frac{1}{n}\sum_{j=1}^n \left( y_j - F_j \hat{\phi}\right)^2 . \\ | ||

| + | \end{eqnarray}</math> }} | ||
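The closed-form solution for the linear case can be checked numerically. The sketch below uses simulated data and hypothetical names ({{Verbatim|Fmat}}, {{Verbatim|phi_hat}}, {{Verbatim|a2_hat}}); it compares the matrix formula with the least-squares fit returned by {{Verbatim|lm}}.

```r
# Closed-form MLE for a linear structural model y = F phi + a eps.
# The data are simulated; all numeric values are illustrative assumptions.
set.seed(123)
t <- seq(0, 10, by = 1)
n <- length(t)
Fmat <- cbind(1, t)                 # row j holds the coefficients at time t_j
phi_true <- c(2, 0.5)
y <- as.vector(Fmat %*% phi_true + 0.3 * rnorm(n))

# phi_hat = (F'F)^{-1} F'y, solved without forming the inverse explicitly
phi_hat <- solve(crossprod(Fmat), crossprod(Fmat, y))

# a_hat^2 = mean squared residual (the MLE divides by n, not by n - d)
a2_hat <- mean((y - Fmat %*% phi_hat)^2)

# Cross-check against R's least-squares fit
fit <- lm(y ~ t)
```

Note that {{Verbatim|summary(fit)$sigma^2}} would divide the residual sum of squares by $n-d_\phi$ rather than by $n$, so it is slightly larger than the MLE $\hat{a}^2$.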


| − | + | <br> | |

| + | ===Computing the Fisher information matrix=== | ||

| − | : | + | The [http://en.wikipedia.org/wiki/Fisher_information Fisher information] is a way of measuring the amount of information that an observable random variable carries about an unknown parameter upon which its probability distribution depends. |

| − | |||

| − | |||

| − | + | Let $\psis $ be the true unknown value of $\psi$, and let $\hatpsi$ be the maximum likelihood estimate of $\psi$. If the observed likelihood function is sufficiently smooth, asymptotic theory for maximum-likelihood estimation holds and | |

| + | {{EquationWithRef | ||

| + | |equation=<div id="intro_individualCLT"><math> | ||

| + | I_n(\psis)^{\frac{1}{2} }(\hatpsi-\psis) \limite{n\to \infty}{} {\mathcal N}(0,\id) , | ||

| + | </math></div> | ||

| + | |reference=(3) }} | ||

| − | + | where $I_n(\psis)$, the ''observed Fisher information matrix'', is minus the Hessian (i.e., the matrix of second derivatives) of the log-likelihood evaluated at $\psis$: |

| + | {{Equation1 | ||

| + | |equation=<math>I_n(\psis)=- \displaystyle{ \frac{\partial^2}{\partial \psi \partial \psi^\prime} } LL(\psis;y_1,y_2,\ldots,y_n) . | ||

| + | </math> }} | ||

| + | Here, "observed" means that it is a function of the observed variables $y_1,y_2,\ldots,y_n$. | ||

| + | Thus, an estimate of the covariance of $\hatpsi$ is the inverse of the observed Fisher information matrix as expressed by the formula: | ||

| − | == | + | {{Equation1 |

| + | |equation=<math>C(\hatpsi) = I_n(\hatpsi)^{-1} . </math> }} | ||
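As a sketch of how this covariance can be obtained in practice, consider the toy model $y_j = \mu + a\teps_j$, for which the MLE is known in closed form. One point to keep in mind: if the function handed to the optimizer is $-2LL$, as in criterion (2), its Hessian is twice the observed Fisher information. The data and the names {{Verbatim|m2LL}}, {{Verbatim|I_n}} and {{Verbatim|C}} below are assumptions made for illustration.

```r
# Standard errors from the observed Fisher information for the toy model
# y_j = mu + a * eps_j. Data are simulated; values are illustrative.
set.seed(42)
y <- 5 + 2 * rnorm(50)
n <- length(y)

# -2 * log-likelihood up to an additive constant, with psi = (mu, a)
m2LL <- function(psi) n * log(psi[2]^2) + sum((y - psi[1])^2) / psi[2]^2

# Closed-form MLE for this model
psi_hat <- c(mean(y), sqrt(mean((y - mean(y))^2)))

# Numerical Hessian of -2LL at the MLE; I_n is half of it
H <- optimHess(psi_hat, m2LL)
I_n <- H / 2
C <- solve(I_n)                # estimated covariance matrix of psi_hat
se <- sqrt(diag(C))            # standard errors of mu_hat and a_hat
```

For this model the result can be checked by hand: the standard error of $\hat{\mu}$ should be close to $\hat{a}/\sqrt{n}$.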


| + | <br> | ||

| + | ===Deriving confidence intervals for parameters=== | ||

| − | + | Let $\psi_k$ be the $k$th of $d$ components of $\psi$. Imagine that we have estimated $\psi_k$ with $\hatpsi_k$, the $k$th component of the MLE $\hatpsi$, that is, a random variable that converges to $\psi_k^{\star}$ when $n \to \infty$ under very general conditions. | |

| − | |||

| + | An estimator of its variance is the $k$th element of the diagonal of the covariance matrix $C(\hatpsi)$: | ||

| − | + | {{Equation1 | |

| − | + | |equation=<math>\widehat{\rm Var}(\hatpsi_k) = C_{kk}(\hatpsi) .</math> }} | |

| − | </math> | ||

| + | We can thus derive an estimator of its [http://en.wikipedia.org/wiki/Standard_error standard error]: | ||

| + | {{Equation1 | ||

| + | |equation=<math>\widehat{\rm s.e.}(\hatpsi_k) = \sqrt{C_{kk}(\hatpsi)} ,</math> }} | ||

| − | + | and a [http://en.wikipedia.org/wiki/Confidence_interval confidence interval] of level $1-\alpha$ for $\psi_k^\star$: | |

| + | {{Equation1 | ||

| + | |equation=<math>{\rm CI}(\psi_k^\star) = \left[\hatpsi_k + \widehat{\rm s.e.}(\hatpsi_k)\,q\left(\frac{\alpha}{2}\right), \ \hatpsi_k + \widehat{\rm s.e.}(\hatpsi_k)\,q\left(1-\frac{\alpha}{2}\right)\right] , </math> }} | ||

| − | + | where $q(w)$ is the [http://en.wikipedia.org/wiki/Quantile quantile] of order $w$ of a ${\cal N}(0,1)$ distribution. | |

| − | |||

| − | |||

| − | + | {{Remarks | |

| + | |title=Remarks | ||

| + | |text= Approximating the distribution of the ratio $(\hatpsi_k-\psi_k^\star)/\widehat{\rm s.e.}(\hatpsi_k)$ by the normal distribution is a "good" approximation only when the number of observations $n$ is large. A better approximation should be used for small $n$. In the model $y_j = f(t_j ; \phi) + a\teps_j$, the distribution of $\hat{a}^2$ can be approximated by a [http://en.wikipedia.org/wiki/Chi-squared_distribution chi-squared distribution] with $(n-d_\phi)$ [http://en.wikipedia.org/wiki/Degrees_of_freedom_%28statistics%29 degrees of freedom], where $d_\phi$ is the dimension of $\phi$. The quantiles of the normal distribution can then be replaced by those of a [http://en.wikipedia.org/wiki/Student%27s_t-distribution Student's $t$-distribution] with $(n-d_\phi)$ degrees of freedom. | ||

| + | <!-- %$${\rm CI}(\psi_k) = [\hatpsi_k - \widehat{\rm s.e}(\hatpsi_k)q((1-\alpha)/2,n-d) , \hatpsi_k + \widehat{\rm s.e}(\hatpsi_k)q((1+\alpha)/2,n-d)]$$ --> | ||

| + | <!-- %where $q(\alpha,\nu)$ is the quantile of order $\alpha$ of a $t$-distribution with $\nu$ degrees of freedom. --> | ||

| + | }} | ||
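The interval above, and the small-sample variant mentioned in the remark, can be sketched in a few lines of {{Verbatim|R}}. The values of {{Verbatim|est}}, {{Verbatim|se}}, {{Verbatim|n}} and {{Verbatim|d_phi}} are hypothetical and are not results from this page.

```r
# Confidence interval of level 1 - alpha for one parameter.
# est, se, n and d_phi are hypothetical values, not results from the text.
est <- 0.23
se <- 0.05
n <- 12
d_phi <- 3
alpha <- 0.10

# Normal quantiles (large n) and Student t quantiles (small n)
ci_normal  <- est + se * qnorm(c(alpha / 2, 1 - alpha / 2))
ci_student <- est + se * qt(c(alpha / 2, 1 - alpha / 2), df = n - d_phi)
```

With only a few degrees of freedom the Student interval is noticeably wider than the normal one, which reflects the extra uncertainty in the estimated standard error.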

| − | |||

| + | <br> | ||

| + | ===Deriving confidence intervals for predictions=== | ||


| + | The value of the structural model at any time $t$ can be predicted by $f(t ; \hatphi)$. For that $t$, we can then derive a confidence interval for $f(t ; \phis)$ using the estimated variance of $\hatphi$. Indeed, to first order (the delta method) we have: | ||

| + | {{Equation1 | ||

| + | |equation=<math> f(t ; \hatphi) \simeq f(t ; \phis) + \nabla f (t,\phis) (\hatphi - \phis) ,</math> }} | ||

| − | + | where $\nabla f(t,\phis)$ is the gradient of $f$ at $\phis$, i.e., the vector of the first-order partial derivatives of $f$ with respect to the components of $\phi$, evaluated at $\phis$. Of course, we do not actually know $\phis$, but we can estimate $\nabla f(t,\phis)$ with $\nabla f(t,\hatphi)$. The variance of $f(t ; \hatphi)$ can then be estimated by | |

| − | + | {{Equation1 | |

| + | |equation=<math> | ||

| + | \widehat{\rm Var}\left(f(t ; \hatphi)\right) \simeq \nabla f (t,\hatphi)\widehat{\rm Var}(\hatphi) \left(\nabla f (t,\hatphi) \right)^\prime . </math> }} | ||

| − | We can | + | We can then derive an estimate of the standard error of $f (t,\hatphi)$ for any $t$: |

| − | + | {{Equation1 | |

| − | + | |equation=<math>\widehat{\rm s.e.}(f(t ; \hatphi)) = \sqrt{\widehat{\rm Var}\left(f(t ; \hatphi)\right)} , </math> }} | |

| − | </math> | ||

| + | and a confidence interval of level $1-\alpha$ for $f(t ; \phi^\star)$: | ||

| − | + | {{Equation1 | |

| + | |equation=<math>{\rm CI}(f(t ; \phi^\star)) = \left[f(t ; \hatphi) + \widehat{\rm s.e.}(f(t ; \hatphi))\,q\left(\frac{\alpha}{2}\right), \ f(t ; \hatphi) + \widehat{\rm s.e.}(f(t ; \hatphi))\,q\left(1-\frac{\alpha}{2}\right)\right].</math> }} | ||
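The steps above can be sketched numerically as follows. The structural model, the estimate {{Verbatim|phi_hat}} and its covariance matrix {{Verbatim|V}} are hypothetical, and the gradient is approximated by central finite differences rather than derived by hand.

```r
# Delta-method confidence interval for a prediction f(t; phi).
# The model, phi_hat and V (covariance of phi_hat) are hypothetical.
f <- function(t, phi) phi[1] * exp(-phi[2] * t)
phi_hat <- c(4, 0.2)
V <- matrix(c(0.04,  0.001,
              0.001, 0.0004), nrow = 2)

# Gradient of f with respect to phi by central finite differences
grad_f <- function(t, phi, h = 1e-6) {
  sapply(seq_along(phi), function(k) {
    e <- replace(numeric(length(phi)), k, h)
    (f(t, phi + e) - f(t, phi - e)) / (2 * h)
  })
}

t0 <- 3
g <- grad_f(t0, phi_hat)
se_f <- sqrt(as.numeric(t(g) %*% V %*% g))   # sqrt of grad * Var * grad'
alpha <- 0.10
ci_f <- f(t0, phi_hat) + se_f * qnorm(c(alpha / 2, 1 - alpha / 2))
```

Repeating the last four lines over a grid of times gives a confidence band around the predicted curve.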


| − | == | + | <br> |

| + | ===Estimating confidence intervals using Monte Carlo simulation=== | ||

| + | The use of [http://en.wikipedia.org/wiki/Monte_Carlo_method Monte Carlo methods] to estimate a distribution does not require any approximation of the model. | ||

| − | + | We proceed in the following way. Suppose we have found an MLE $\hatpsi$ of $\psi$. We then simulate a data vector $y^{(1)}$ by first randomly generating the vector $\teps^{(1)}$ and then calculating for $1 \leq j \leq n$, |


| − | { | ||


| − | |} | + | {{Equation1 |

| + | |equation=<math> y^{(1)}_j = f(t_j ;\hatpsi) + g(t_j ;\hatpsi)\teps^{(1)}_j . </math> }} | ||

| + | In a sense, this gives us an example of "new" data from the "same" model. We can then compute a new MLE $\hat{\psi}^{(1)}$ of $\psi$ using $y^{(1)}$. | ||

| + | Repeating this process $M$ times gives $M$ estimates of $\psi$ from which we can obtain an empirical estimation of the distribution of $\hatpsi$, or any quantile we like. | ||

| + | Any confidence interval for $\psi_k$ (resp. $f(t ; \psi)$) can then be approximated by a prediction interval for $\hatpsi_k$ (resp. $f(t ; \hatpsi)$). For instance, a two-sided confidence interval of level $1-\alpha$ for $\psi_k^\star$ can be estimated by the prediction interval | ||

| + | {{Equation1 | ||

| + | |equation=<math> [\hat{\psi}_{k,([\frac{\alpha}{2} M])} \ , \ \hat{\psi}_{k,([ (1-\frac{\alpha}{2})M])} ], </math> }} | ||

| − | + | where $[\cdot]$ denotes the [http://en.wikipedia.org/wiki/Floor_and_ceiling_functions integer part] and $(\hatpsi_{k,(m)},\ 1 \leq m \leq M)$ the order statistics, i.e., the estimates $(\hatpsi_k^{(m)}, 1 \leq m \leq M)$ reordered so that $\hatpsi_{k,(1)} \leq \hatpsi_{k,(2)} \leq \ldots \leq \hatpsi_{k,(M)}$. |
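The Monte Carlo procedure can be sketched as follows. To keep the example short and fast, a linear structural model is assumed so that each MLE is available in closed form; the data, the number of replicates {{Verbatim|M}} and the helper {{Verbatim|mle}} are illustrative assumptions. For a nonlinear model, {{Verbatim|mle}} would instead call a numerical optimizer.

```r
# Monte Carlo confidence interval for a slope parameter.
# Model, data and M are illustrative assumptions; a linear model
# is used so that each MLE is available in closed form.
set.seed(2024)
t <- 0:15
n <- length(t)
X <- cbind(1, t)
y <- as.vector(X %*% c(1, 0.5) + 0.4 * rnorm(n))

# MLE of psi = (intercept, slope, a) under a constant error model
mle <- function(y) {
  phi <- as.vector(solve(crossprod(X), crossprod(X, y)))
  a <- sqrt(mean((y - X %*% phi)^2))
  c(phi, a)
}
psi_hat <- mle(y)

M <- 1000
psi_mc <- replicate(M, {
  # simulate "new" data from the fitted model, then re-estimate
  y_sim <- as.vector(X %*% psi_hat[1:2]) + psi_hat[3] * rnorm(n)
  mle(y_sim)
})                                   # 3 x M matrix of estimates

alpha <- 0.10
slope_sorted <- sort(psi_mc[2, ])    # order statistics of the slope estimates
ci_slope <- slope_sorted[c(floor(alpha / 2 * M), floor((1 - alpha / 2) * M))]
```

The same matrix {{Verbatim|psi_mc}} can be reused to build intervals for any function of the parameters, for example a predicted value at a given time.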


| + | <br> | ||

| + | ==A PK example == | ||

| + | In the real world, it is often not enough to look at the data, choose one possible model and estimate the parameters. The chosen structural model may or may not be "good" at representing the data. It may be good but the chosen residual error model bad, meaning that the overall model is poor, and so on. That is why in practice we may want to try out several structural and residual error models. After performing parameter estimation for each model, various assessment tasks can then be performed in order to conclude which model is best. | ||


| + | <br> | ||

| + | ===The data=== | ||

| + | This modeling process is illustrated in detail in the following [http://en.wikipedia.org/wiki/Pharmacokinetics PK] example. Let us consider a dose D=50mg of a drug administered orally to a patient at time $t=0$. The concentration of the drug in the bloodstream is then measured at times $(t_j) = (0.5, 1,\,1.5,\,2,\,3,\,4,\,8,\,10,\,12,\,16,\,20,\,24).$ Here is the file {{Verbatim|individualFitting_data.txt}} with the data: | ||

| − | {| align= | + | {| class="wikitable" align="center" style="width: 30%;margin-left:15em" |

| − | | | + | !| Time || Concentration |

| + | |- | ||

| + | |0.5 || 0.94 | ||

| + | |- | ||

| + | | 1.0 || 1.30 | ||

| + | |- | ||

| + | | 1.5 || 1.64 | ||

| + | |- | ||

| + | | 2.0 || 3.38 | ||

| + | |- | ||

| + | | 3.0 || 3.72 | ||

|- | |- | ||

| − | | | + | | 4.0 || 3.29 |

|- | |- | ||

| − | | | + | | 8.0 || 1.31 |

|- | |- | ||

| − | | | + | | 10.0 || 0.80 |

|- | |- | ||

| − | | | + | | 12.0 || 0.39 |

|- | |- | ||

| − | | | + | | 16.0 || 0.31 |

|- | |- | ||

| − | | | + | | 20.0 || 0.10 |

| + | |- | ||

| + | | 24.0 || 0.09 | ||

|} | |} | ||

| + | We are going to perform the analyses for this example with the free statistical software [http://www.r-project.org/ {{Verbatim|R}}]. First, we import the data and plot it to have a look: | ||

| + | {| cellpadding="5" cellspacing="0" | ||

| + | | style="width: 50%" | | ||

| + | [[File:NewIndividual1.png|link=]] | ||

| + | | style="width: 50%" | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | pk1=read.table("individualFitting_data.txt",header=TRUE) | ||

| + | t=pk1$time | ||

| + | y=pk1$concentration | ||

| + | plot(t, y, xlab="time(hour)", | ||

| + | ylab="concentration(mg/l)", col="blue") | ||

| + | </pre> }} | ||

| + | |} | ||

| − | + | ||

| − | + | <br> | |

| − | + | ||

| − | + | ===Fitting two PK models=== | |

| − | + | ||

| + | We are going to consider two possible structural models that may describe the observed time-course of the concentration: | ||

| − | + | <ul> | |

| + | * A [http://en.wikipedia.org/wiki/Multi-compartment_model#Single-compartment_model one compartment model] with first-order [http://en.wikipedia.org/wiki/Absorption_%28pharmacokinetics%29 absorption] and linear elimination: | ||

| + | {{Equation1 | ||

| + | |equation=<math>\begin{eqnarray} | ||

| + | \phi_1 &=& (k_a, V, k_e) \\ | ||

| + | f_1(t ; \phi_1) &=& \frac{D\, k_a}{V(k_a-k_e)} \left( e^{-k_e \, t} - e^{-k_a \, t} \right). | ||

| + | \end{eqnarray}</math> }} | ||

| − | + | * A one compartment model with zero-order absorption and linear elimination: | |


| + | {{Equation1 | ||

| + | |equation=<math>\begin{eqnarray} | ||

| + | \phi_2 &=& (T_{k0}, V, k_e) \\ | ||

| + | f_2(t ; \phi_2) &=& \left\{ \begin{array}{ll} | ||

| + | \displaystyle{ \frac{D}{V \,T_{k0} \, k_e} }\left( 1- e^{-k_e \, t} \right) & {\rm if }\ t\leq T_{k0} \\ | ||

| + | \displaystyle{ \frac{D}{V \,T_{k0} \, k_e} } \left( 1- e^{-k_e \, T_{k0} } \right)e^{-k_e \, (t- T_{k0})} & {\rm otherwise} . | ||

| + | \end{array} | ||

| + | \right. | ||

| + | \end{eqnarray}</math> }} | ||

| + | </ul> | ||

| + | We define each of these functions in {{Verbatim|R}}: | ||

| − | + | {{Rcode | |

| − | + | |name= | |

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | predc1=function(t,x){ | ||

| + | f=50*x[1]/x[2]/(x[1]-x[3])*(exp(-x[3]*t)-exp(-x[1]*t)) | ||

| + | return(f)} | ||

| + | predc2=function(t,x){ | ||

| + | f=50/x[1]/x[2]/x[3]*(1-exp(-x[3]*t)) | ||

| + | f[t>x[1]]=50/x[1]/x[2]/x[3]*(1-exp(-x[3]*x[1]))*exp(-x[3]*(t[t>x[1]]-x[1])) | ||

| + | return(f)} </pre> | ||

| + | }} | ||

| + | We then define two models ${\cal M}_1$ and ${\cal M}_2$ that assume (for now) constant residual error models: | ||

| − | { | + | {{Equation1 |

| − | | | + | |equation=<math>\begin{eqnarray} |

| − | + | {\cal M}_1 : \quad y_j & = & f_1(t_j ; \phi_1) + a_1\teps_j \\ | |

| + | {\cal M}_2 : \quad y_j & = & f_2(t_j ; \phi_2) + a_2\teps_j . | ||

| + | \end{eqnarray}</math> }} | ||

| − | + | We can fit these two models to our data by computing the MLE $\hatpsi_1=(\hatphi_1,\hat{a}_1)$ and $\hatpsi_2=(\hatphi_2,\hat{a}_2)$ of $\psi$ under each model: | |

| − | |||

| + | {| cellpadding="10" cellspacing="10" | ||

| + | | style="width:50%" | | ||

| + | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | fmin1=function(x,y,t){ | ||

| + | f=predc1(t,x) | ||

| + | g=x[4] | ||

| + | e=sum( ((y-f)/g)^2 + log(g^2)) | ||

| + | return(e)} | ||

| + | fmin2=function(x,y,t){ | ||

| + | f=predc2(t,x) | ||

| + | g=x[4] | ||

| + | e=sum( ((y-f)/g)^2 + log(g^2)) | ||

| + | return(e)} | ||

| + | #--------- MLE -------------------------------- | ||

| + | pk.nlm1=nlm(fmin1, c(0.3,6,0.2,1), y, t, hessian=TRUE) | ||

| + | psi1=pk.nlm1$estimate | ||

| − | + | pk.nlm2=nlm(fmin2, c(3,10,0.2,4), y, t, hessian=TRUE) |

| + | psi2=pk.nlm2$estimate | ||

| + | </pre> | ||

| + | }} | ||

| + | | style="width:50%" | | ||

| + | :Here are the parameter estimation results: | ||

| + | {{JustCodeForTable | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none; color:blue"> | ||

| + | > cat(" psi1 =",psi1,"\n\n") | ||

| + | psi1 = 0.3240916 6.001204 0.3239337 0.4366948 | ||

| − | + | > cat(" psi2 =",psi2,"\n\n") | |

| − | + | psi2 = 3.203111 8.999746 0.229977 0.2555242 | |

| − | + | </pre> }} | |

| − | |||

|} | |} | ||

| + | <br> | ||

| − | + | ===Assessing and selecting the PK model=== | |

| + | The estimated parameters $\hatphi_1$ and $\hatphi_2$ can then be used for computing the predicted concentrations $\hat{f}_1(t)$ and $\hat{f}_2(t)$ under both models at any time $t$. These curves can then be plotted over the original data and compared: | ||

| − | {| | + | {| cellpadding="5" cellspacing="0" |

| − | | style="width: 50%" | | + | | style="width:50%" | |

| − | + | [[File:New_Individual2.png|link=]] | |

| + | | style="width:50%" | | ||

| + | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | tc=seq(from=0,to=25,by=0.1) | ||

| + | phi1=psi1[c(1,2,3)] | ||

| + | fc1=predc1(tc,phi1) | ||

| + | phi2=psi2[c(1,2,3)] | ||

| + | fc2=predc2(tc,phi2) | ||

| + | |||

| + | plot(t,y,ylim=c(0,4.1),xlab="time (hour)", | ||

| + | ylab="concentration (mg/l)",col = "blue") | ||

| + | lines(tc,fc1, type = "l", col = "green", lwd=2) | ||

| + | lines(tc,fc2, type = "l", col = "red", lwd=2) | ||

| + | abline(a=0,b=0,lty=2) | ||

| + | legend(13,4,c("observations","first order absorption", | ||

| + | "zero order absorption"), | ||

| + | lty=c(-1,1,1), pch=c(1,-1,-1), lwd=2, col=c("blue","green","red")) | ||

| + | </pre> }} | ||

|} | |} | ||

| + | We clearly see that a much better fit is obtained with model ${\cal M}_2$, i.e., the one assuming a zero-order absorption process. | ||

| + | |||

| + | Another useful goodness-of-fit plot is obtained by displaying the observations $(y_j)$ versus the predictions $\hat{y}_j=f(t_j ; \hatpsi)$ given by the models: | ||

| + | {| cellpadding="5" cellspacing="0" | ||

| + | | style="width:50%" | | ||

| + | [[File:individual3.png|link=]] | ||

| + | | style="width:50%" | | ||

| + | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | f1=predc1(t,phi1) | ||

| + | f2=predc2(t,phi2) | ||

| + | par(mfrow= c(1,2)) | ||

| + | plot(f1,y,xlim=c(0,4),ylim=c(0,4),main="model 1") | ||

| + | abline(a=0,b=1,lty=1) | ||

| + | plot(f2,y,xlim=c(0,4),ylim=c(0,4),main="model 2") | ||

| + | abline(a=0,b=1,lty=1) | ||

| + | </pre> }} | ||

| + | |} | ||

| − | + | <br> | |

| + | ===Model selection=== | ||

| − | + | Again, ${\cal M}_2$ would seem to have a slight edge. This can be assessed more formally using the [http://en.wikipedia.org/wiki/Bayesian_information_criterion Bayesian Information Criterion] (BIC): |


| + | {| cellpadding="10" cellspacing="10" | ||

| + | | style="width:50%" | | ||

| + | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | n=length(y) | ||

| + | deviance1=pk.nlm1$minimum + n*log(2*pi) | ||

| + | bic1=deviance1+log(n)*length(psi1) | ||

| + | deviance2=pk.nlm2$minimum + n*log(2*pi) | ||

| + | bic2=deviance2+log(n)*length(psi2) | ||

| + | </pre> }} | ||

| + | | style="width:50%" | | ||

| + | {{JustCodeForTable | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none; color:blue"> | ||

| + | > cat(" bic1 =",bic1,"\n\n") | ||

| + | bic1 = 24.10972 | ||

| − | + | > cat(" bic2 =",bic2,"\n\n") | |

| + | bic2 = 11.24769 | ||

| + | </pre> }} | ||

| + | |} | ||

| − | \ | + | A smaller BIC is better. Therefore, this also suggests that model ${\cal M}_2$ should be selected. |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | <br> | |

| − | + | ||

| + | ===Fitting different error models=== | ||

| + | |||

| + | |||

| + | For the moment, we have only considered constant error models. However, the "observations vs predictions" figure hints that the amplitude of the residual errors may increase with the size of the predicted value. Let us therefore take a closer look at four different residual error models, each of which we will associate with the "best" structural model $f_2$: | ||

| − | + | {| cellpadding="2" cellspacing="8" style="text-align:left; margin-left:4%" | |

| − | { | + | |${\cal M}_2$ || Constant error model: || $y_j=f_2(t_j;\phi_2)+a_2\teps_j$ |

| − | + | |- | |

| − | + | |${\cal M}_3$ || Proportional error model: || $y_j=f_2(t_j;\phi_3)+b_3f_2(t_j;\phi_3)\teps_j$ | |

| − | } | + | |- |

| + | |${\cal M}_4$ || Combined error model: || $y_j=f_2(t_j;\phi_4)+(a_4+b_4f_2(t_j;\phi_4))\teps_j$ | ||

| + | |- | ||

| + | |${\cal M}_5$ || Exponential error model: || $\log(y_j)=\log(f_2(t_j;\phi_5)) + a_5\teps_j$. | ||

| + | |} | ||

| − | + | The three new ones need to be entered into {{Verbatim|R}}: | |


| − | + | {{Rcode | |

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | fmin3=function(x,y,t){ | ||

| + | f=predc2(t,x) | ||

| + | g=x[4]*f | ||

| + | e=sum( ((y-f)/g)^2 + log(g^2)) | ||

| + | return(e)} | ||

| − | + | fmin4=function(x,y,t){ | |

| + | f=predc2(t,x) | ||

| + | g=abs(x[4])+abs(x[5])*f | ||

| + | e=sum( ((y-f)/g)^2 + log(g^2)) | ||

| + | return(e)} | ||

| − | + | fmin5=function(x,y,t){ | |

| − | + | f=predc2(t,x) | |

| − | + | g=x[4] | |

| − | g=x[4] | + | e=sum( ((log(y)-log(f))/g)^2 + log(g^2)) |

| − | e=sum( ((y-f)/g)^2 + log(g^2)) | + | return(e)} |

| − | } | + | </pre> }} |


| − | + | We can now compute the MLE $\hatpsi_3=(\hatphi_3,\hat{b}_3)$, $\hatpsi_4=(\hatphi_4,\hat{a}_4,\hat{b}_4)$ and $\hatpsi_5=(\hatphi_5,\hat{a}_5)$ of $\psi$ under models ${\cal M}_3$, ${\cal M}_4$ and ${\cal M}_5$: | |

| − | |||

| − | |||

| − | |||

| − | } | ||

| + | {| cellpadding="10" cellspacing="10" | ||

| + | |style="width:50%" | | ||

| + | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

#---------------- MLE ------------------- | #---------------- MLE ------------------- | ||

| − | pk.nlm3=nlm(fmin3, c(phi2,0.1), y, t, hessian="true") | + | pk.nlm3=nlm(fmin3, c(phi2,0.1), y, t, |

| + | hessian="true") | ||

psi3=pk.nlm3$estimate | psi3=pk.nlm3$estimate | ||

| − | pk.nlm4=nlm(fmin4, c(phi2,1,0.1), y, t, hessian="true") | + | pk.nlm4=nlm(fmin4, c(phi2,1,0.1), y, t, |

| + | hessian="true") | ||

psi4=pk.nlm4$estimate | psi4=pk.nlm4$estimate | ||

psi4[c(4,5)]=abs(psi4[c(4,5)]) | psi4[c(4,5)]=abs(psi4[c(4,5)]) | ||

| − | pk.nlm5=nlm(fmin5, c(phi2,0.1), y, t, hessian="true") | + | pk.nlm5=nlm(fmin5, c(phi2,0.1), y, t, |

| − | psi5=pk.nlm5$estimate | + | hessian="true") |

| − | + | psi5=pk.nlm5$estimate | |

| − | + | </pre> }} | |

| − | + | |style="width:50%" | | |

| − | + | {{JustCodeForTable | |

| + | |code=<pre style="background-color: #EFEFEF; border:none; color:blue"> | ||

> cat(" psi3 =",psi3,"\n\n") | > cat(" psi3 =",psi3,"\n\n") | ||

psi3 = 2.642409 11.44113 0.1838779 0.2189221 | psi3 = 2.642409 11.44113 0.1838779 0.2189221 | ||

| Line 494: | Line 619: | ||

> cat(" psi5 =",psi5,"\n\n") | > cat(" psi5 =",psi5,"\n\n") | ||

psi5 = 2.710984 11.2744 0.188901 0.2310001 | psi5 = 2.710984 11.2744 0.188901 0.2310001 | ||

| + | </pre> }} | ||

| + | |} | ||

| − | |||

| − | + | <br> | |

| − | + | ===Selecting the error model=== | |

| − | + | As before, these curves can be plotted over the original data and compared: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | plot(t,y,ylim=c(0,4.1),xlab="time (hour)",ylab="concentration (mg/l)",col = "blue" | + | {| cellpadding="5" cellspacing="0" |

| − | + | |style="width:50%"| |

| + | [[File:New_Individual4.png|link=]] | ||

| + | |style="width:50%"| | ||

| + | {{RcodeForTable | ||

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | phi3=psi3[c(1,2,3)] | ||

| + | fc3=predc2(tc,phi3) | ||

| + | phi4=psi4[c(1,2,3)] | ||

| + | fc4=predc2(tc,phi4) | ||

| + | phi5=psi5[c(1,2,3)] | ||

| + | fc5=predc2(tc,phi5) | ||

| + | |||

| + | par(mfrow= c(1,1)) | ||

| + | plot(t,y,ylim=c(0,4.1),xlab="time (hour)",ylab="concentration (mg/l)", | ||

| + | col = "blue") | ||

lines(tc,fc2, type = "l", col = "red", lwd=2) | lines(tc,fc2, type = "l", col = "red", lwd=2) | ||

| + | lines(tc,fc3, type = "l", col = "green", lwd=2) | ||

| + | lines(tc,fc4, type = "l", col = "cyan", lwd=2) | ||

| + | lines(tc,fc5, type = "l", col = "magenta", lwd=2) | ||

abline(a=0,b=0,lty=2) | abline(a=0,b=0,lty=2) | ||

| − | legend(13,4,c("observations"," | + | legend(13,4,c("observations","constant error model", |

| − | lty=c(-1,1,1), pch=c(1,-1,-1), lwd=2, col=c("blue","green"," | + | "proportional error model","combined error model","exponential error model"), |

| − | + | lty=c(-1,1,1,1,1), pch=c(1,-1,-1,-1,-1), lwd=2, | |

| + | col=c("blue","red","green","cyan","magenta")) | ||

| + | </pre> }} | ||

| + | |} | ||

| − | \ | + | As you can see, the three predicted concentrations obtained with models ${\cal M}_3$, ${\cal M}_4$ and ${\cal M}_5$ are quite similar. We now calculate the BIC for each: |

| − | |||

| − | \ | ||

| − | |||

| − | + | {| cellpadding="10" cellspacing="10" | |

| − | + | |style="width:50%"| |

| − | + | {{RcodeForTable | |

| − | + | |name= | |

| − | + | |code= | |

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

deviance3=pk.nlm3$minimum + n*log(2*pi) | deviance3=pk.nlm3$minimum + n*log(2*pi) | ||

bic3=deviance3 + log(n)*length(psi3) | bic3=deviance3 + log(n)*length(psi3) | ||

| Line 534: | Line 676: | ||

deviance5=pk.nlm5$minimum + 2*sum(log(y)) + n*log(2*pi) | deviance5=pk.nlm5$minimum + 2*sum(log(y)) + n*log(2*pi) | ||

bic5=deviance5 + log(n)*length(psi5) | bic5=deviance5 + log(n)*length(psi5) | ||

| − | + | </pre> }} | |

| − | + | |style="width:50%"| |

| − | + | {{JustCodeForTable | |

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none; color:blue"> | ||

> cat(" bic3 =",bic3,"\n\n") | > cat(" bic3 =",bic3,"\n\n") | ||

bic3 = 3.443607 | bic3 = 3.443607 | ||

| Line 545: | Line 689: | ||

> cat(" bic5 =",bic5,"\n\n") | > cat(" bic5 =",bic5,"\n\n") | ||

bic5 = 4.108521 | bic5 = 4.108521 | ||

| + | </pre> }} | ||

| + | |} | ||

| − | + | All of these BIC values are lower than the one obtained with the constant error model. BIC selects the residual error model ${\cal M}_3$ with a proportional component. |

| − | + | There is not a large difference between these three error models, though the proportional and combined error models give the smallest and essentially identical BIC. We decide to use the combined error model ${\cal M}_4$ in the following (the same types of analysis could be done with the proportional error model). | |

| − | + | A 90% confidence interval for $\psi_4$ can be derived from the Hessian (i.e., the square matrix of second-order partial derivatives) of the objective function (i.e., -2 $\times \ LL$): |

| − | |||

| − | % | + | {| cellpadding="10" cellspacing="10" |

| − | + | |style="width:50%"| |

| − | + | {{RcodeForTable | |

| − | + | |name= | |

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | ialpha=0.9 | ||

df=n-length(phi4) | df=n-length(phi4) | ||

I4=pk.nlm4$hessian/2 | I4=pk.nlm4$hessian/2 | ||

H4=solve(I4) | H4=solve(I4) | ||

s4=sqrt(diag(H4)*n/df) | s4=sqrt(diag(H4)*n/df) | ||

| − | delta4=s4*qt(0.5+ | + | delta4=s4*qt(0.5+ialpha/2, df) |

ci4=matrix(c(psi4-delta4,psi4+delta4),ncol=2) | ci4=matrix(c(psi4-delta4,psi4+delta4),ncol=2) | ||

| − | + | </pre> }} | |

| − | + | |style="width:50%"| |

| − | + | {{JustCodeForTable | |

| − | + | |code= | |

| + | <pre style="background-color: #EFEFEF; border:none; color:blue"> | ||

> ci4 | > ci4 | ||

[,1] [,2] | [,1] [,2] | ||

| Line 575: | Line 724: | ||

[4,] -0.02444571 0.07927403 | [4,] -0.02444571 0.07927403 | ||

[5,] 0.04119983 0.25006660 | [5,] 0.04119983 0.25006660 | ||

| + | </pre>}} | ||

| + | |} | ||

| − | |||

| − | % | + | We can also calculate a 90% confidence interval for $f_4(t)$ using the [http://en.wikipedia.org/wiki/Central_limit_theorem Central Limit Theorem] (see [[#intro_individualCLT|(3)]]): |

| − | |||

| − | + | {{Rcode | |

| − | + | |name= | |

| − | + | |code= | |

| − | nlpredci=function(phi,f,H) | + | <pre style="background-color: #EFEFEF; border:none"> |

| − | { | + | nlpredci=function(phi,f,H){ |

| − | dphi=length(phi) | + | dphi=length(phi) |

| − | nf=length(f) | + | nf=length(f) |

| − | H=H*n/(n-dphi) | + | H=H*n/(n-dphi) |

| − | S=H[seq(1,dphi),seq(1,dphi)] | + | S=H[seq(1,dphi),seq(1,dphi)] |

| − | G=matrix(nrow=nf,ncol=dphi) | + | G=matrix(nrow=nf,ncol=dphi) |

| − | for (k in seq(1,dphi)) { | + | for (k in seq(1,dphi)) { |

| − | + | dk=phi[k]*(1e-5) | |

| − | + | phid=phi | |

| − | + | phid[k]=phi[k] + dk | |

| − | + | fd=predc2(tc,phid) | |

| − | + | G[,k]=(fd-f)/dk |

| − | } | + | } |

| − | M=rowSums((G%*%S)*G) | + | M=rowSums((G%*%S)*G) |

| − | deltaf=sqrt(M)*qt(0.5+alpha/2,df) | + | deltaf=sqrt(M)*qt(0.5+ialpha/2,df) | |

| − | } | + | return(deltaf)} |

deltafc4=nlpredci(phi4,fc4,H4) | deltafc4=nlpredci(phi4,fc4,H4) | ||

| + | </pre>}} | ||

| − | + | This can then be plotted: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | {| cellpadding="5" cellspacing="0" | |

| − | + | |style="width:50%"| |

| − | + | [[File:NewIndividual6.png|link=]] | |

| − | + | |style="width:50%"| |

| − | + | {{RcodeForTable | |

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

| + | plot(t,y,ylim=c(0,4.5), xlab="time (hour)", | ||

| + | ylab="concentration (mg/l)", col="blue") | ||

| + | lines(tc,fc4, type = "l",col = "red",lwd=2) | ||

| + | lines(tc, fc4-deltafc4, type = "l", | ||

| + | col = "red" ,lwd=1, lty=3) | ||

| + | lines(tc,fc4+deltafc4,type = "l", | ||

| + | col = "red", lwd=1, lty=3) | ||

| + | abline(a=0,b=0,lty=2) | ||

| + | legend(10.5,4.5,c("observed concentrations", | ||

| + | "predicted concentration", | ||

| + | "CI for predicted concentration"), | ||

| + | lty=c(-1,1,3),pch=c(1,-1,-1),lwd=c(2,2,1), | ||

| + | col=c("blue","red","red")) | ||

| + | </pre> }} | ||

| + | |} | ||

| − | Alternatively, prediction intervals for $\ | + | Alternatively, prediction intervals for $\hatpsi_4$, $\hat{f}_4(t;\hatpsi_4)$ and new observations for any time $t$ can be estimated by Monte Carlo simulation: |

| − | |||

| − | + | {{Rcode | |

| + | |name= | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none"> | ||

f=predc2(t,phi4) | f=predc2(t,phi4) | ||

a4=psi4[4] | a4=psi4[4] | ||

| Line 668: | Line 831: | ||

par(mfrow= c(1,1)) | par(mfrow= c(1,1)) | ||

| − | plot(t,y,ylim=c(0,4.5),xlab="time (hour)",ylab="concentration (mg/l)",col = "blue") | + | plot(t,y,ylim=c(0,4.5),xlab="time (hour)", |

| + | ylab="concentration (mg/l)",col = "blue") | ||

lines(tc,fc4, type = "l", col = "red", lwd=2) | lines(tc,fc4, type = "l", col = "red", lwd=2) | ||

lines(tc,cifc4s[,1], type = "l", col = "red", lwd=1, lty=3) | lines(tc,cifc4s[,1], type = "l", col = "red", lwd=1, lty=3) | ||

| Line 675: | Line 839: | ||

lines(tc,ciy4s[,2], type = "l", col = "green", lwd=1, lty=3) | lines(tc,ciy4s[,2], type = "l", col = "green", lwd=1, lty=3) | ||

abline(a=0,b=0,lty=2) | abline(a=0,b=0,lty=2) | ||

| − | legend(10.5,4.5,c("observed concentrations","predicted concentration", | + | legend(10.5,4.5,c("observed concentrations", "predicted concentration", |

| − | " | + | "CI for predicted concentration", "CI for observed concentrations"), |

| − | pch=c(1,-1,-1,-1), lwd=c(2,2,1,1), col=c("blue","red","red","green")) | + | lty=c(-1,1,3,3), pch=c(1,-1,-1,-1), lwd=c(2,2,1,1), col=c("blue","red","red","green")) |

| − | + | </pre> }} | |

| + | |||

| − | + | {| cellpadding="5" cellspacing="0" | |

| + | |style="width:50%"| | ||

| + | [[File:NewIndividual7.png|link=]] | ||

| + | |style="width:50%"| | ||

| + | {{JustCodeForTable | ||

| + | |code= | ||

| + | <pre style="background-color: #EFEFEF; border:none; color:blue"> | ||

> ci4s | > ci4s | ||

[,1] [,2] | [,1] [,2] | ||

| Line 688: | Line 859: | ||

[4,] 5.445459e-09 0.08819339 | [4,] 5.445459e-09 0.08819339 | ||

[5,] 1.563625e-02 0.19638889 | [5,] 1.563625e-02 0.19638889 | ||

| + | </pre> }} | ||

| + | |} | ||

| − | |||

| − | + | The R code and input data used in this section can be downloaded here: {{filepath:R_IndividualFitting.rar}}. | |

| − | + | <br> | |

| − | |||

| − | + | ==Bibliography== | |


| + | <bibtex> | ||

| + | @book{buonaccorsi2010measurement, | ||

| + | title={Measurement Error: Models, Methods, and Applications}, | ||

| + | author={Buonaccorsi, J.P.}, | ||

| + | isbn={9781420066586}, | ||

| + | lccn={2009048849}, | ||

| + | series={Chapman & Hall/CRC Interdisciplinary Statistics}, | ||

| + | url={http://books.google.fr/books?id=QVtVmaCqLHMC}, | ||

| + | year={2010}, | ||

| + | publisher={Taylor & Francis} | ||

| + | } | ||

| + | </bibtex><bibtex> | ||

| + | @book{carroll2010measurement, | ||

| + | title={Measurement Error in Nonlinear Models: A Modern Perspective, Second Edition}, | ||

| + | author={Carroll, R.J. and Ruppert, D. and Stefanski, L.A. and Crainiceanu, C.M.}, | ||

| + | isbn={9781420010138}, | ||

| + | lccn={2006045485}, | ||

| + | series={Chapman & Hall/CRC Monographs on Statistics & Applied Probability}, | ||

| + | url={http://books.google.fr/books?id=9kBx5CPZCqkC}, | ||

| + | year={2010}, | ||

| + | publisher={Taylor & Francis} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{fitzmaurice2004applied, | ||

| + | title={Applied Longitudinal Analysis}, | ||

| + | author={Fitzmaurice, G.M. and Laird, N.M. and Ware, J.H.}, | ||

| + | isbn={9780471214878}, | ||

| + | lccn={04040891}, | ||

| + | series={Wiley Series in Probability and Statistics}, | ||

| + | url={http://books.google.fr/books?id=gCoTIFejMgYC}, | ||

| + | year={2004}, | ||

| + | publisher={Wiley} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{gallant2009nonlinear, | ||

| + | title={Nonlinear Statistical Models}, | ||

| + | author={Gallant, A.R.}, | ||

| + | isbn={9780470317372}, | ||

| + | series={Wiley Series in Probability and Statistics}, | ||

| + | url={http://books.google.fr/books?id=imv-NMozseEC}, | ||

| + | year={2009}, | ||

| + | publisher={Wiley} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{huet2003statistical, | ||

| + | title={Statistical tools for nonlinear regression: a practical guide with S-PLUS and R examples}, | ||

| + | author={Huet, S. and Bouvier, A. and Poursat, M.A. and Jolivet, E.}, | ||

| + | year={2003}, | ||

| + | publisher={Springer} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{ritz2008nonlinear, | ||

| + | title={Nonlinear regression with R}, | ||

| + | author={Ritz, C. and Streibig, J.C.}, | ||

| + | volume={33}, | ||

| + | year={2008}, | ||

| + | publisher={Springer New York} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{ross1990nonlinear, | ||

| + | title={Nonlinear estimation}, | ||

| + | author={Ross, G.J.S.}, | ||

| + | isbn={9780387972787}, | ||

| + | lccn={90032797}, | ||

| + | series={Springer series in statistics}, | ||

| + | url={http://books.google.fr/books?id=7LkyzdLMghIC}, | ||

| + | year={1990}, | ||

| + | publisher={Springer-Verlag} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{seber2003nonlinear, | ||

| + | title={Nonlinear Regression}, | ||

| + | author={Seber, G.A.F. and Wild, C.J.}, | ||

| + | isbn={9780471471356}, | ||

| + | lccn={88017194}, | ||

| + | series={Wiley Series in Probability and Statistics}, | ||

| + | url={http://books.google.fr/books?id=YBYlCpBNo\_cC}, | ||

| + | year={2003}, | ||

| + | publisher={Wiley} | ||

| + | } | ||

| + | </bibtex><bibtex> | ||

| + | @article{serroyen2009nonlinear, | ||

| + | title={Nonlinear models for longitudinal data}, | ||

| + | author={Serroyen, J. and Molenberghs, G. and Verbeke, G. and Davidian, M. }, | ||

| + | journal={The American Statistician}, | ||

| + | volume={63}, | ||

| + | number={4}, | ||

| + | pages={378-388}, | ||

| + | year={2009}, | ||

| + | publisher={Taylor & Francis} | ||

| + | } | ||

| + | </bibtex> | ||

| + | <bibtex> | ||

| + | @book{wolberg2006data, | ||

| + | title={Data analysis using the method of least squares: extracting the most information from experiments}, | ||

| + | author={Wolberg, J.R.}, | ||

| + | volume={1}, | ||

| + | year={2006}, | ||

| + | publisher={Springer Berlin, Germany} | ||

| + | } | ||

| + | </bibtex> | ||

| − | |||

| − | + | {{Back&Next | |

| − | + | |linkBack=Overview | |

| − | + | |linkNext=What is a model? A joint probability distribution! }} | |


Latest revision as of 14:58, 28 August 2013

Contents

- 1 Overview

- 2 Model and methods for the individual approach

- 2.1 Defining a model

- 2.2 Choosing a residual error model

- 2.3 Tasks

- 2.4 Selecting structural and residual error models

- 2.5 Parameter estimation

- 2.6 Computing the Fisher information matrix

- 2.7 Deriving confidence intervals for parameters

- 2.8 Deriving confidence intervals for predictions

- 2.9 Estimating confidence intervals using Monte Carlo simulation

- 3 A PK example

- 4 Bibliography

Overview

Before we start looking at modeling a whole population at the same time, we are going to consider only one individual from that population. Much of the basic methodology for modeling one individual follows through to population modeling. We will see that when stepping up from one individual to a population, the difference is that some parameters shared by individuals are considered to be drawn from a probability distribution.

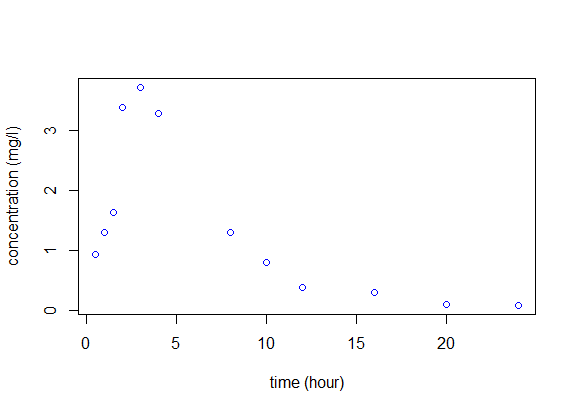

Let us begin with a simple example. An individual receives 100mg of a drug at time $t=0$. At that time and then every hour for fifteen hours, the concentration of a marker in the bloodstream is measured and plotted against time:

We aim to find a mathematical model to describe what we see in the figure. The eventual goal is then to extend this approach to the simultaneous modeling of a whole population.

Model and methods for the individual approach

Defining a model

In our example, the concentration is a continuous variable, so we will try to use continuous functions to model it. Different types of data (e.g., count data, categorical data, time-to-event data, etc.) require different types of models. All of these data types will be considered in due time, but for now let us concentrate on a continuous data model.

A model for continuous data can be represented mathematically as follows:

\( y_{j} = f(t_j ; \psi) + e_j , \quad \quad 1\leq j \leq n, \)

where:

- $f$ is called the structural model. It corresponds to the basic type of curve we suspect the data is following, e.g., linear, logarithmic, exponential, etc. Sometimes, a model of the associated biological processes leads to equations that define the curve's shape.

- $(t_1,t_2,\ldots , t_n)$ is the vector of observation times. Here, $t_1 = 0$ hours and $t_n = t_{16} = 15$ hours.

- $\psi=(\psi_1, \psi_2, \ldots, \psi_d)$ is a vector of $d$ parameters that influences the value of $f$.

- $(e_1, e_2, \ldots, e_n)$ are called the residual errors. Usually, we suppose that they come from some centered probability distribution: $\esp{e_j} =0$.

In fact, we usually state a continuous data model in a slightly more flexible way:

\( y_{j} = f(t_j ; \psi) + g(t_j ; \psi)\teps_j , \quad \quad 1\leq j \leq n, \quad \quad (1) \)

where now:

- $g$ is called the residual error model. It may be a function of the time $t_j$ and parameters $\psi$.

- $(\teps_1, \teps_2, \ldots, \teps_n)$ are the normalized residual errors. We suppose that these come from a probability distribution which is centered and has unit variance: $\esp{\teps_j} = 0$ and $\var{\teps_j} =1$.

Choosing a residual error model

The choice of a residual error model $g$ is very flexible, and allows us to account for many different hypotheses we may have on the error's distribution. Let $f_j=f(t_j;\psi)$. Here are some simple error models.

- Constant error model: $g=a$. That is, $y_j=f_j+a\teps_j$.

- Proportional error model: $g=b\,f$. That is, $y_j=f_j+bf_j\teps_j$. This is for when we think the magnitude of the error is proportional to the predicted value $f$.

- Combined error model: $g=a+b f$. Here, $y_j=f_j+(a+bf_j)\teps_j$.

- Alternative combined error model: $g^2=a^2+b^2f^2$. Here, $y_j=f_j+\sqrt{a^2+b^2f_j^2}\teps_j$.

- Exponential error model: here, the model is instead $\log(y_j)=\log(f_j) + a\teps_j$, that is, $g=a$. It is exponential in the sense that if we exponentiate, we end up with $y_j = f_j e^{a\teps_j}$.
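As a rough sketch, the error models listed above can be written as R functions of the prediction $f$. The function names, the prediction vector and the parameter values below are hypothetical and only serve the illustration.

```r
# Residual error models g as functions of the prediction f (illustrative names)
g_constant     <- function(f, a)    rep(a, length(f))
g_proportional <- function(f, b)    b * f
g_combined     <- function(f, a, b) a + b * f
g_combined2    <- function(f, a, b) sqrt(a^2 + b^2 * f^2)

# Simulate y_j = f_j + g_j * eps_j for a hypothetical prediction vector
set.seed(1)
f   <- c(1, 2, 4)
eps <- rnorm(length(f))
y_comb <- f + g_combined(f, a = 0.1, b = 0.2) * eps

# Exponential error model: log(y_j) = log(f_j) + a * eps_j,
# i.e. y_j = f_j * exp(a * eps_j), which is always positive
y_exp <- f * exp(0.2 * eps)
```

With the proportional and combined models the spread of the simulated observations grows with $f$, whereas the exponential model additionally guarantees positive observations.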

Tasks

To model a vector of observations $y = (y_j,\, 1\leq j \leq n)$ we must perform several tasks:

- Select a structural model $f$ and a residual error model $g$.

- Estimate the model's parameters $\psi$.

- Assess and validate the selected model.

Selecting structural and residual error models

As we are interested in parametric modeling, we must choose parametric structural and residual error models. In the absence of biological (or other) information, we might suggest possible structural models just by looking at the graphs of time-evolution of the data. For example, if $y_j$ is increasing with time, we might suggest an affine, quadratic or logarithmic model, depending on the approximate trend of the data. If $y_j$ is instead decreasing ever more slowly toward zero, an exponential model might be appropriate.

However, often we have biological (or other) information to help us make our choice. For instance, if we have a system of differential equations describing how the drug is eliminated from the body, its solution may provide the formula (i.e., structural model) we are looking for.

As for the residual error model, if it is not immediately obvious which one to choose, several can be tested in conjunction with one or several possible structural models. After parameter estimation, each structural and residual error model pair can be assessed, compared against the others, and/or validated in various ways.

Now we can have a first look at parameter estimation, and further on, model assessment and validation.

Parameter estimation

Given the observed data and the choice of a parametric model to describe it, our goal becomes to find the "best" parameters for the model. A traditional framework to solve this kind of problem is called maximum likelihood estimation or MLE, in which the "most likely" parameters are found, given the data that was observed.

The likelihood $L$ is a function defined as:

\( L(\psi ; y_1,y_2,\ldots,y_n) = p(y_1,y_2,\ldots,y_n ; \psi) , \)

i.e., the conditional joint density function of $(y_j)$ given the parameters $\psi$, but looked at as if the data are known and the parameters not. The $\hat{\psi}$ which maximizes $L$ is known as the maximum likelihood estimator.

Suppose that we have chosen a structural model $f$ and residual error model $g$. If we assume for instance that $ \teps_j \sim_{i.i.d} {\cal N}(0,1)$, then the $y_j$ are independent of each other and (1) means that:

\( y_j \sim {\cal N}\left( f(t_j ; \psi) , \, g(t_j ; \psi)^2 \right) , \quad \quad 1\leq j \leq n . \)

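Due to this independence, the pdf of $y = (y_1, y_2, \ldots, y_n)$ is the product of the pdfs of each $y_j$:

\( p(y_1, y_2, \ldots, y_n ; \psi) = \prod_{j=1}^n p(y_j ; \psi) = \frac{1}{\prod_{j=1}^n \sqrt{2\pi} \, g(t_j ; \psi)} \exp\left\{ -\frac{1}{2} \sum_{j=1}^n \left( \displaystyle{ \frac{y_j - f(t_j ; \psi)}{g(t_j ; \psi)} }\right)^2 \right\} . \)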

This is the same thing as the likelihood function $L$ when seen as a function of $\psi$. Maximizing $L$ is equivalent to minimizing the deviance, i.e., -2 $\times$ the $\log$-likelihood ($LL$):

\(\begin{eqnarray}
\hat{\psi} &=& \argmin{\psi} \left\{ -2 \,LL \right\}\\
&=& \argmin{\psi} \left\{ \sum_{j=1}^n \log\left(g(t_j ; \psi)^2\right) + \sum_{j=1}^n \left(\displaystyle{ \frac{y_j - f(t_j ; \psi)}{g(t_j ; \psi)} }\right)^2 \right\} . \quad \quad (2)
\end{eqnarray}\)

This minimization problem does not usually have an analytical solution for nonlinear models, so an optimization procedure needs to be used.
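A minimal sketch of such a numerical minimization in R is given here, using the optimizer `nlm` and the objective in (2). The structural model `f_exp` (a mono-exponential decay), the simulated data and the starting values are assumptions made for this illustration only.

```r
# Hypothetical structural model: mono-exponential decay, phi = (A, k)
f_exp <- function(t, phi) phi[1] * exp(-phi[2] * t)

# Objective (2) with a constant error model g = a, psi = (A, k, a)
neg2LL <- function(psi, y, t) {
  f <- f_exp(t, psi[1:2])
  g <- psi[3]
  sum(log(g^2) + ((y - f) / g)^2)
}

# Simulated observations from known parameter values
set.seed(123)
t_obs <- seq(0.5, 10, by = 0.5)
y_obs <- f_exp(t_obs, c(10, 0.3)) + 0.2 * rnorm(length(t_obs))

# Numerical minimization, starting from a rough initial guess
psi0 <- c(8, 0.2, 0.5)
fit  <- nlm(neg2LL, psi0, y = y_obs, t = t_obs, hessian = TRUE)
psi_hat    <- fit$estimate
psi_hat[3] <- abs(psi_hat[3])  # the objective depends on a only through a^2
```

Because the objective depends on $a$ only through $a^2$, the optimizer may return a negative value for it, hence the final `abs()`.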

However, for a few specific models, analytical solutions do exist.

For instance, suppose we have a constant error model: $y_{j} = f(t_j ; \psi) + a \, \teps_j,\,\, 1\leq j \leq n,$ that is: $g(t_j;\psi) = a$. In practice, $f$ is not itself a function of $a$, so we can write $\psi = (\phi,a)$ and therefore: $y_{j} = f(t_j ; \phi) + a \, \teps_j.$ Thus, (2) simplifies to:

\( \hat{\psi} = \argmin{(\phi,a)} \left\{ n \log(a^2) + \displaystyle{ \frac{1}{a^2} } \sum_{j=1}^n \left(y_j - f(t_j ; \phi)\right)^2 \right\} . \)

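The solution is then:

\(\begin{eqnarray}
\hat{\phi} &=& \argmin{\phi} \sum_{j=1}^n \left(y_j - f(t_j ; \phi)\right)^2 \\
\hat{a}^2 &=& \frac{1}{n} \sum_{j=1}^n \left(y_j - f(t_j ; \hat{\phi})\right)^2 ,
\end{eqnarray}\)

where $\hat{a}^2$ is found by setting the partial derivative of $-2LL$ with respect to $a^2$ to zero.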

Whether this has an analytical solution or not depends on the form of $f$. For example, if $f(t_j;\phi)$ is just a linear function of the components of the vector $\phi$, we can represent it as a matrix $F$ whose $j$th row gives the coefficients at time $t_j$. Therefore, we have the matrix equation $y = F \phi + a \teps$.

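The solution for $\hat{\phi}$ is thus the least-squares one, and for $\hat{a}^2$ it is the same as before:

\( \hat{\phi} = (F^\prime F)^{-1} F^\prime y , \quad \quad \hat{a}^2 = \frac{1}{n} \| y - F \hat{\phi} \|^2 . \)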
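The linear case can be checked numerically. The sketch below assumes a hypothetical affine structural model and simulated data, computes the least-squares estimate from the matrix formula $(F^\prime F)^{-1}F^\prime y$, and compares it with R's built-in `lm`.

```r
set.seed(42)
t_obs <- 0:15
Fmat  <- cbind(1, t_obs)            # j-th row: coefficients of phi at time t_j
phi_true <- c(2, 0.5)               # hypothetical true parameters
y <- as.vector(Fmat %*% phi_true + 0.3 * rnorm(length(t_obs)))

# Least-squares solution for phi
phi_hat <- as.vector(solve(t(Fmat) %*% Fmat, t(Fmat) %*% y))

# MLE of a^2: mean squared residual (divides by n, not by n - d)
a2_hat <- mean((y - Fmat %*% phi_hat)^2)

# Cross-check with the built-in linear regression
fit_lm <- lm(y ~ t_obs)
```

Note that `summary(fit_lm)$sigma^2` divides the residual sum of squares by $n-d$ instead of $n$, so it is slightly larger than the maximum likelihood estimate `a2_hat`.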

Computing the Fisher information matrix

The Fisher information is a way of measuring the amount of information that an observable random variable carries about an unknown parameter upon which its probability distribution depends.

Let $\psis $ be the true unknown value of $\psi$, and let $\hatpsi$ be the maximum likelihood estimate of $\psi$. If the observed likelihood function is sufficiently smooth, asymptotic theory for maximum-likelihood estimation holds and

\( I_n(\psis)^{\frac{1}{2} }(\hatpsi-\psis) \limite{n\to \infty}{} {\mathcal N}(0,\id) , \quad \quad (3) \)

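where

\( I_n(\psis) = - \displaystyle{ \frac{\partial^2}{\partial \psi \, \partial \psi^\prime} } LL(\psis ; y_1,y_2,\ldots,y_n) \)

is the observed Fisher information matrix, i.e., minus the Hessian (the matrix of the second derivatives) of the log-likelihood, evaluated at $\psis$. Here, "observed" means that it is a function of the observed variables $y_1,y_2,\ldots,y_n$.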

Thus, an estimate of the covariance of $\hatpsi$ is the inverse of the observed Fisher information matrix evaluated at $\hatpsi$:

\( C(\hatpsi) = I_n(\hatpsi)^{-1} . \)

Deriving confidence intervals for parameters

Let $\psi_k$ be the $k$th of $d$ components of $\psi$. Imagine that we have estimated $\psi_k$ with $\hatpsi_k$, the $k$th component of the MLE $\hatpsi$, that is, a random variable that converges to $\psi_k^{\star}$ when $n \to \infty$ under very general conditions.

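An estimator of its variance is the $k$th element of the diagonal of the covariance matrix $C(\hatpsi)$:

\( \widehat{\rm Var}(\hatpsi_k) = C_{kk}(\hatpsi) . \)

We can thus derive an estimator of its standard error:

\( \widehat{\rm s.e.}(\hatpsi_k) = \sqrt{C_{kk}(\hatpsi)} , \)

and a confidence interval of level $1-\alpha$ for $\psi_k^\star$:

\( {\rm CI}(\psi_k^\star) = \left[ \hatpsi_k + \widehat{\rm s.e.}(\hatpsi_k) \, q(\alpha/2) \ , \ \hatpsi_k + \widehat{\rm s.e.}(\hatpsi_k) \, q(1-\alpha/2) \right] , \)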

where $q(w)$ is the quantile of order $w$ of a ${\cal N}(0,1)$ distribution.
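The chain from Hessian to confidence interval can be sketched in R on a deliberately simple model, $y_j = \mu + a\teps_j$, for which the MLE and the standard errors are known in closed form. The data, the function `neg2LL` and the use of `optimHess` for the numerical Hessian are choices made for this illustration.

```r
set.seed(7)
y <- rnorm(50, mean = 3, sd = 0.5)   # simulated data
n <- length(y)

# -2*LL (up to an additive constant) for y_j = mu + a * eps_j, psi = (mu, a)
neg2LL <- function(psi) n * log(psi[2]^2) + sum((y - psi[1])^2) / psi[2]^2

# Closed-form MLE for this simple model
psi_hat <- c(mean(y), sqrt(mean((y - mean(y))^2)))

# Observed Fisher information: Hessian of -LL, i.e. half the Hessian of -2*LL
I_n <- optimHess(psi_hat, neg2LL) / 2
C   <- solve(I_n)                    # estimated covariance matrix of psi_hat
se  <- sqrt(diag(C))                 # standard errors

# Confidence intervals of level 1 - alpha based on N(0,1) quantiles
alpha <- 0.10
ci <- cbind(lower = psi_hat + se * qnorm(alpha / 2),
            upper = psi_hat + se * qnorm(1 - alpha / 2))
```

For this model the standard errors should be close to $\hat{a}/\sqrt{n}$ for $\mu$ and $\hat{a}/\sqrt{2n}$ for $a$, which gives a simple way to check the numerical Hessian.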

Deriving confidence intervals for predictions

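The structural model $f$ can be predicted for any $t$ using the estimated value $f(t; \hatphi)$. For that $t$, we can then derive a confidence interval for $f(t,\phi)$ using the estimated variance of $\hatphi$. Indeed, as a first approximation we have:

\( f(t ; \hatphi) \simeq f(t ; \phis) + \nabla f (t,\phis) (\hatphi - \phis) , \)

where $\nabla f(t,\phis)$ is the gradient of $f$ at $\phis$, i.e., the vector of the first-order partial derivatives of $f$ with respect to the components of $\phi$, evaluated at $\phis$. Of course, we do not actually know $\phis$, but we can estimate $\nabla f(t,\phis)$ with $\nabla f(t,\hatphi)$. The variance of $f(t ; \hatphi)$ can then be estimated by

\( \widehat{\rm Var}\left(f(t ; \hatphi)\right) \simeq \nabla f (t,\hatphi) \, \widehat{\rm Var}(\hatphi) \, \left(\nabla f (t,\hatphi) \right)^\prime . \)

We can then derive an estimate of the standard error of $f (t,\hatphi)$ for any $t$:

\( \widehat{\rm s.e.}\left(f(t ; \hatphi)\right) = \sqrt{ \widehat{\rm Var}\left(f(t ; \hatphi)\right) } , \)

and a confidence interval of level $1-\alpha$ for $f(t ; \phi^\star)$:

\( {\rm CI}\left(f(t ; \phi^\star)\right) = \left[ f(t ; \hatphi) + \widehat{\rm s.e.}\left(f(t ; \hatphi)\right) q(\alpha/2) \ , \ f(t ; \hatphi) + \widehat{\rm s.e.}\left(f(t ; \hatphi)\right) q(1-\alpha/2) \right] . \)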
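A minimal sketch of this gradient-based computation in R follows. It assumes a hypothetical affine structural model `f_lin` with a constant error model and simulated data, so that the covariance of $\hatphi$ is available in closed form; the gradient is approximated by forward differences.

```r
set.seed(11)
t_obs <- 0:15
n <- length(t_obs)
f_lin <- function(t, phi) phi[1] + phi[2] * t    # hypothetical structural model
y <- f_lin(t_obs, c(2, 0.5)) + 0.3 * rnorm(n)

# MLE of phi and its estimated covariance under a constant error model
Fmat    <- cbind(1, t_obs)
phi_hat <- as.vector(solve(t(Fmat) %*% Fmat, t(Fmat) %*% y))
a2_hat  <- mean((y - f_lin(t_obs, phi_hat))^2)
C_phi   <- a2_hat * solve(t(Fmat) %*% Fmat)

# Gradient of f with respect to phi at each prediction time (forward differences)
tc <- seq(0, 15, by = 0.5)
G <- sapply(seq_along(phi_hat), function(k) {
  h <- 1e-5 * max(abs(phi_hat[k]), 1)
  phid <- phi_hat
  phid[k] <- phid[k] + h
  (f_lin(tc, phid) - f_lin(tc, phi_hat)) / h
})

# Standard error of f(t; phi_hat): sqrt of diag(G C G'), computed row by row
se_f <- sqrt(rowSums((G %*% C_phi) * G))

alpha <- 0.10
fc   <- f_lin(tc, phi_hat)
ci_f <- cbind(lower = fc + se_f * qnorm(alpha / 2),
              upper = fc + se_f * qnorm(1 - alpha / 2))
```

The expression `rowSums((G %*% C_phi) * G)` extracts only the diagonal of $G\,C\,G^\prime$, which avoids building the full matrix when there are many prediction times.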

Estimating confidence intervals using Monte Carlo simulation

The use of Monte Carlo methods to estimate a distribution does not require any approximation of the model.

We proceed in the following way. Suppose we have found a MLE $\hatpsi$ of $\psi$. We then simulate a data vector $y^{(1)}$ by first randomly generating the vector $\teps^{(1)}$ and then calculating for $1 \leq j \leq n$,

\( y_j^{(1)} = f(t_j ; \hatpsi) + g(t_j ; \hatpsi) \, \teps_j^{(1)} . \)

In a sense, this gives us an example of "new" data from the "same" model. We can then compute a new MLE $\hat{\psi}^{(1)}$ of $\psi$ using $y^{(1)}$.

Repeating this process $M$ times gives $M$ estimates of $\psi$ from which we can obtain an empirical estimation of the distribution of $\hatpsi$, or any quantile we like.

Any confidence interval for $\psi_k$ (resp. $f(t,\psi_k)$) can then be approximated by a prediction interval for $\hatpsi_k$ (resp. $f(t,\hatpsi_k)$). For instance, a two-sided confidence interval of level $1-\alpha$ for $\psi_k^\star$ can be estimated by the prediction interval

\( \left[ \hatpsi_{k,([\frac{\alpha}{2} M])} \ , \ \hatpsi_{k,([ (1-\frac{\alpha}{2})M])} \right] , \)

where $[\cdot]$ denotes the integer part and $(\hatpsi_{k,(m)},\ 1 \leq m \leq M)$ the order statistic, i.e., the parameters $(\hatpsi_k^{(m)}, 1 \leq m \leq M)$ reordered so that $\hatpsi_{k,(1)} \leq \hatpsi_{k,(2)} \leq \ldots \leq \hatpsi_{k,(M)}$.
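The simulation procedure above can be sketched in R as follows. To keep the example short, it assumes the simple model $y_j = \mu + a\teps_j$, whose MLE is available in closed form (the helper `mle`), rather than a nonlinear model requiring an optimizer at each replicate; the data and the number of replicates $M$ are also illustrative.

```r
set.seed(2013)
y <- rnorm(40, mean = 3, sd = 0.5)   # simulated "observed" data
n <- length(y)

# Closed-form MLE of psi = (mu, a) for y_j = mu + a * eps_j
mle <- function(y) c(mu = mean(y), a = sqrt(mean((y - mean(y))^2)))
psi_hat <- mle(y)

# Simulate M new data sets from the fitted model and re-estimate psi each time
M <- 1000
psi_sim <- t(replicate(M, {
  y_sim <- psi_hat["mu"] + psi_hat["a"] * rnorm(n)  # y^(m) = f + g * eps^(m)
  mle(y_sim)
}))

# Prediction interval from the order statistics of the M estimates
alpha  <- 0.10
k_low  <- max(floor(alpha / 2 * M), 1)
k_high <- floor((1 - alpha / 2) * M)
ci_mc  <- t(apply(psi_sim, 2, function(v) sort(v)[c(k_low, k_high)]))
```

For a nonlinear model, `mle` would be replaced by a call to a numerical optimizer, which makes the loop more expensive but leaves the structure of the procedure unchanged.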

A PK example

In the real world, it is often not enough to look at the data, choose one possible model and estimate the parameters. The chosen structural model may or may not be "good" at representing the data. It may be good but the chosen residual error model bad, meaning that the overall model is poor, and so on. That is why in practice we may want to try out several structural and residual error models. After performing parameter estimation for each model, various assessment tasks can then be performed in order to conclude which model is best.

The data

This modeling process is illustrated in detail in the following PK example. Let us consider a dose D=50mg of a drug administered orally to a patient at time $t=0$. The concentration of the drug in the bloodstream is then measured at times $(t_j) = (0.5, 1,\,1.5,\,2,\,3,\,4,\,8,\,10,\,12,\,16,\,20,\,24).$ Here is the file individualFitting_data.txt with the data:

| Time | Concentration |

|---|---|

| 0.5 | 0.94 |

| 1.0 | 1.30 |

| 1.5 | 1.64 |

| 2.0 | 3.38 |

| 3.0 | 3.72 |

| 4.0 | 3.29 |

| 8.0 | 1.31 |

| 10.0 | 0.80 |

| 12.0 | 0.39 |

| 16.0 | 0.31 |

| 20.0 | 0.10 |

| 24.0 | 0.09 |

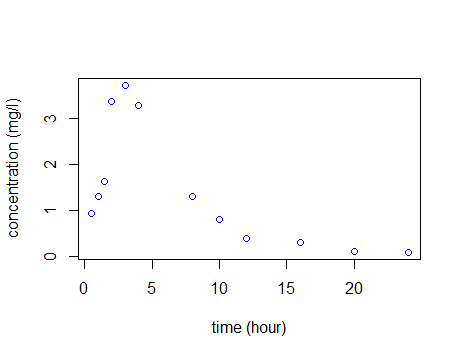

We are going to perform the analyses for this example with the free statistical software R. First, we import the data and plot it to have a look:


Fitting two PK models

We are going to consider two possible structural models that may describe the observed time-course of the concentration:

- A one compartment model with first-order absorption and linear elimination:

- A one compartment model with zero-order absorption and linear elimination:

We define each of these functions in R:

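We then define two models ${\cal M}_1$ and ${\cal M}_2$ that assume (for now) constant residual error models:

\(\begin{eqnarray}
{\cal M}_1 : \quad y_j &=& f_1(t_j ; \phi_1) + a_1 \teps_j \\
{\cal M}_2 : \quad y_j &=& f_2(t_j ; \phi_2) + a_2 \teps_j .
\end{eqnarray}\)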

We can fit these two models to our data by computing the MLE $\hatpsi_1=(\hatphi_1,\hat{a}_1)$ and $\hatpsi_2=(\hatphi_2,\hat{a}_2)$ of $\psi$ under each model:


Assessing and selecting the PK model

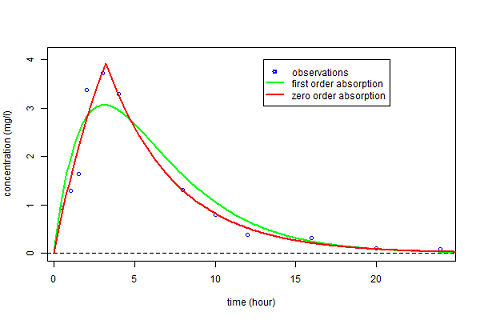

The estimated parameters $\hatphi_1$ and $\hatphi_2$ can then be used for computing the predicted concentrations $\hat{f}_1(t)$ and $\hat{f}_2(t)$ under both models at any time $t$. These curves can then be plotted over the original data and compared:


We clearly see that a much better fit is obtained with model ${\cal M}_2$, i.e., the one assuming a zero-order absorption process.

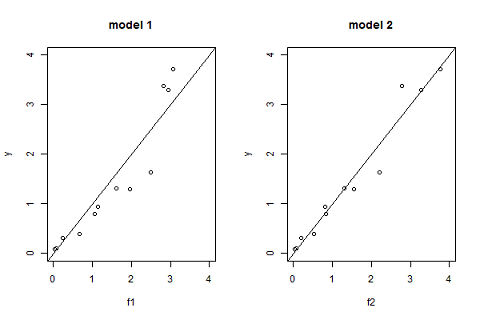

Another useful goodness-of-fit plot is obtained by displaying the observations $(y_j)$ versus the predictions $\hat{y}_j=f(t_j ; \hatpsi)$ given by the models:


Model selection

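Again, ${\cal M}_2$ would seem to have a slight edge. This can be tested more analytically using the Bayesian Information Criterion (BIC):

\( BIC = -2 \, LL(\hatpsi) + \log(n) \, d , \)

where $d$ is the number of estimated parameters, i.e., the length of $\psi$.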

A smaller BIC is better. Therefore, this also suggests that model ${\cal M}_2$ should be selected.
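As a small self-contained check of how the BIC is assembled from the minimized objective, the sketch below uses a hypothetical linear model with constant error and simulated data, and compares the hand-computed value with R's built-in `BIC`. The constant $n\log(2\pi)$ is added back because it is omitted from the objective in (2).

```r
set.seed(5)
t_obs <- 0:15
n <- length(t_obs)
y <- 2 + 0.5 * t_obs + 0.3 * rnorm(n)   # simulated data

fit    <- lm(y ~ t_obs)
a2_hat <- mean(resid(fit)^2)            # MLE of a^2

# Minimized objective (2): n*log(a^2) + sum(res^2)/a^2 = n*log(a2_hat) + n
obj_min <- n * log(a2_hat) + n
dev     <- obj_min + n * log(2 * pi)    # -2 * LL at the MLE
n_par   <- length(coef(fit)) + 1        # structural parameters plus a
bic     <- dev + log(n) * n_par
```

Omitting the constant $n\log(2\pi)$ would not change the ranking of models fitted to the same observations, but it is needed to match the values reported by standard software.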

Fitting different error models

For the moment, we have only considered constant error models. However, the "observations vs predictions" figure hints that the amplitude of the residual errors may increase with the size of the predicted value. Let us therefore take a closer look at four different residual error models, each of which we will associate with the "best" structural model $f_2$:

| ${\cal M}_2$ | Constant error model: | $y_j=f_2(t_j;\phi_2)+a_2\teps_j$ |

| ${\cal M}_3$ | Proportional error model: | $y_j=f_2(t_j;\phi_3)+b_3f_2(t_j;\phi_3)\teps_j$ |

| ${\cal M}_4$ | Combined error model: | $y_j=f_2(t_j;\phi_4)+(a_4+b_4f_2(t_j;\phi_4))\teps_j$ |

| ${\cal M}_5$ | Exponential error model: | $\log(y_j)=\log(f_2(t_j;\phi_5)) + a_5\teps_j$. |

The three new ones need to be entered into R:
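One possible way to code the corresponding objective functions is sketched below. The helper names are assumptions; `f` stands for the vector of predictions $f_2(t_j;\phi)$. For the exponential model, the term `2 * sum(log(y))` is the Jacobian of the log transformation, included here so that the value is a likelihood for $y$ and stays comparable with the other models; whether the original code does the same is not known.

```r
# -2 x log-likelihood of y_j = f_j + g_j * eps_j with eps_j ~ N(0, 1)
m2ll.normal <- function(y, f, g) {
  sum(((y - f) / g)^2 + 2 * log(g)) + length(y) * log(2 * pi)
}

# M3: proportional error, g = b * f
m2ll.prop <- function(y, f, b) m2ll.normal(y, f, b * f)

# M4: combined error, g = a + b * f
m2ll.comb <- function(y, f, a, b) m2ll.normal(y, f, a + b * f)

# M5: exponential error, log(y) = log(f) + a * eps
m2ll.exp <- function(y, f, a) {
  m2ll.normal(log(y), log(f), a) + 2 * sum(log(y))
}
```

Note that the proportional and exponential models are only defined where the prediction is strictly positive, so an observation at a time where $f_2(t_j;\phi)=0$ (for instance $t=0$) would need to be handled separately.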

We can now compute the MLE $\hatpsi_3=(\hatphi_3,\hat{b}_3)$, $\hatpsi_4=(\hatphi_4,\hat{a}_4,\hat{b}_4)$ and $\hatpsi_5=(\hatphi_5,\hat{a}_5)$ of $\psi$ under models ${\cal M}_3$, ${\cal M}_4$ and ${\cal M}_5$:

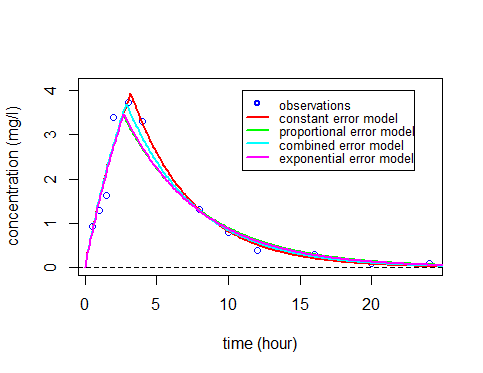

Selecting the error model

As before, these curves can be plotted over the original data and compared:


As you can see, the three predicted concentrations obtained with models ${\cal M}_3$, ${\cal M}_4$ and ${\cal M}_5$ are quite similar. We now calculate the BIC for each:

All of these BIC values are lower than that of the constant error model ${\cal M}_2$. BIC selects the residual error model ${\cal M}_3$ with a proportional component.

There is not a large difference between these three error models, though the proportional and combined error models give the smallest and essentially identical BIC. We decide to use the combined error model ${\cal M}_4$ in the following (the same types of analysis could be done with the proportional error model).

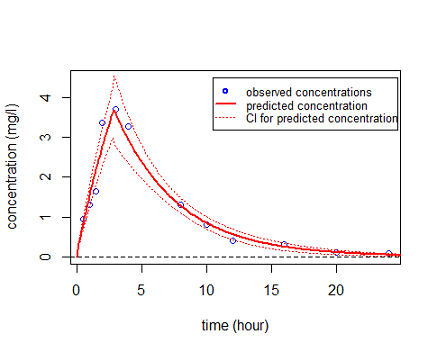

A 90% confidence interval for $\psi_4$ can be derived from the Hessian (i.e., the square matrix of second-order partial derivatives) of the objective function (i.e., $-2 \times LL$):
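The general recipe can be sketched as follows: because the objective is $-2 \times LL$, the covariance matrix of the MLE is approximated by twice the inverse of its Hessian. The helper `ci.hessian` is a hypothetical name, and the numerical check uses a toy normal model with a closed-form MLE rather than ${\cal M}_4$, purely to keep the example short.

```r
# Wald-type confidence interval from the Hessian of the objective -2 x LL.
# Cov(psi.hat) is approximated by 2 x (inverse Hessian of -2 x LL).
ci.hessian <- function(m2ll, psi.hat, level = 0.90) {
  H  <- optimHess(psi.hat, m2ll)          # numerical Hessian at the estimate
  se <- sqrt(diag(2 * solve(H)))          # standard errors
  z  <- qnorm(1 - (1 - level) / 2)        # 1.645 for a 90% interval
  cbind(estimate = psi.hat, lower = psi.hat - z * se, upper = psi.hat + z * se)
}

# Toy check: y ~ N(mu, s^2), for which the MLE is known in closed form
set.seed(1)
y <- rnorm(50, mean = 5, sd = 2)
m2ll <- function(psi) -2 * sum(dnorm(y, psi[1], psi[2], log = TRUE))
psi.hat <- c(mean(y), sqrt(mean((y - mean(y))^2)))
ci <- ci.hessian(m2ll, psi.hat)
```

For the combined error model, the same function would be called with the $-2 \times LL$ objective of ${\cal M}_4$ and the estimate $\hatpsi_4$, expressed on the scale on which the interval is wanted.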

We can also calculate a 90% confidence interval for $f_4(t)$ using the Central Limit Theorem (see (3)):

This can then be plotted:


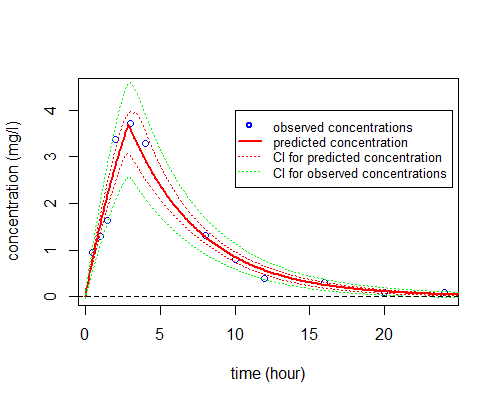

Alternatively, confidence intervals for $\psi_4$ and $f_4(t;\psi_4)$, and prediction intervals for new observations at any time $t$, can be estimated by Monte Carlo simulation:
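One common way to do this is a parametric Monte Carlo scheme (a parametric bootstrap): simulate replicate data sets from the fitted model, refit each one, and take empirical quantiles. The sketch below follows that idea for the combined error model. The dose, the sampling times, the number of replicates `K` and the values standing in for $\hatpsi_4$ are all hypothetical, and the original code may proceed differently.

```r
set.seed(123)
D <- 100; t <- 0:15                       # assumed dose and sampling times

pred2 <- function(phi, t) {               # zero-order absorption, phi = c(Tk0, V, ke)
  rate <- D / (phi[1] * phi[2] * phi[3])
  ifelse(t <= phi[1],
         rate * (1 - exp(-phi[3] * t)),
         rate * (1 - exp(-phi[3] * phi[1])) * exp(-phi[3] * (t - phi[1])))
}

# -2 x LL (up to a constant) for the combined error model; theta = log(psi)
m2ll4 <- function(theta, y) {
  psi <- exp(theta)
  f <- pred2(psi[1:3], t)
  g <- psi[4] + psi[5] * f
  sum(((y - f) / g)^2 + 2 * log(g))
}
fit4 <- function(y, start)
  exp(optim(log(start), m2ll4, y = y, control = list(maxit = 2000))$par)

# Stand-in for the estimate psi.hat_4 (hypothetical values, not the fitted ones)
psi.hat <- c(Tk0 = 3, V = 10, ke = 0.2, a = 0.1, b = 0.1)
f.hat   <- pred2(psi.hat[1:3], t)

K <- 200                                  # number of Monte Carlo replicates
sim <- replicate(K, {
  y.k   <- f.hat + (psi.hat[4] + psi.hat[5] * f.hat) * rnorm(length(t))  # simulate data
  psi.k <- fit4(y.k, psi.hat)                                            # refit the model
  f.k   <- pred2(psi.k[1:3], t)                                          # refitted curve
  y.new <- f.k + (psi.k[4] + psi.k[5] * f.k) * rnorm(length(t))          # new observations
  c(psi.k, f.k, y.new)
})

# Empirical 5% and 95% quantiles; na.rm guards against a failed refit
q <- apply(sim, 1, quantile, probs = c(0.05, 0.95), na.rm = TRUE)
ci.psi <- q[, 1:5]      # 90% confidence intervals for the parameters
ci.f   <- q[, 6:21]     # 90% confidence band for the predicted concentration
pi.y   <- q[, 22:37]    # 90% prediction band for new observations
```

The prediction band `pi.y` is wider than the confidence band `ci.f` because it adds residual variability to the uncertainty in the estimated curve.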


The R code and input data used in this section can be downloaded here: https://wiki.inria.fr/wikis/popix/images/a/a1/R_IndividualFitting.rar.

Bibliography

Buonaccorsi, J.P. - Measurement Error: Models, Methods, and Applications

- Taylor & Francis, 2010

- http://books.google.fr/books?id=QVtVmaCqLHMC

Carroll, R.J., Ruppert, D., Stefanski, L.A., Crainiceanu, C.M. - Measurement Error in Nonlinear Models: A Modern Perspective, Second Edition

- Taylor & Francis, 2010

- http://books.google.fr/books?id=9kBx5CPZCqkC

Fitzmaurice, G.M., Laird, N.M., Ware, J.H. - Applied Longitudinal Analysis

- Wiley, 2004

- http://books.google.fr/books?id=gCoTIFejMgYC

Gallant, A.R. - Nonlinear Statistical Models

- Wiley, 2009

- http://books.google.fr/books?id=imv-NMozseEC

Huet, S., Bouvier, A., Poursat, M.A., Jolivet, E. - Statistical tools for nonlinear regression: a practical guide with S-PLUS and R examples

Ritz, C., Streibig, J.C. - Nonlinear regression with R

- Vol. 33, Springer New York, 2008

Ross, G.J.S. - Nonlinear estimation

- Springer-Verlag, 1990

- http://books.google.fr/books?id=7LkyzdLMghIC

Seber, G.A.F., Wild, C.J. - Nonlinear Regression

- Wiley, 2003

- http://books.google.fr/books?id=YBYlCpBNo_cC

Serroyen, J., Molenberghs, G., Verbeke, G., Davidian, M. - Nonlinear models for longitudinal data

- The American Statistician 63(4):378-388, 2009

Wolberg, J.R. - Data analysis using the method of least squares: extracting the most information from experiments

- Vol. 1, Springer Berlin, Germany, 2006