Difference between revisions of "Estimation"

m |

m (→Bayesian estimation of the population parameters) |

||

| (24 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | + | == Introduction == | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | == | ||

In the modeling context, we usually assume that we have data that includes observations $\by$, measurement times $\bt$ and possibly additional regression variables $\bx$. There may also be individual covariates $\bc$, and in pharmacological applications the dose regimen $\bu$. For clarity, in the following notation we will omit the design variables $\bt$, $\bx$ and $\bu$, and the covariates $\bc$. | In the modeling context, we usually assume that we have data that includes observations $\by$, measurement times $\bt$ and possibly additional regression variables $\bx$. There may also be individual covariates $\bc$, and in pharmacological applications the dose regimen $\bu$. For clarity, in the following notation we will omit the design variables $\bt$, $\bx$ and $\bu$, and the covariates $\bc$. | ||

| − | Here, we find ourselves in the classical framework of incomplete data models. | + | Here, we find ourselves in the classical framework of incomplete data models. Indeed, only $\by = (y_{ij})$ is observed in the joint model $\pypsi(\by,\bpsi;\theta)$. |

Estimation tasks are common ones seen in statistics: | Estimation tasks are common ones seen in statistics: | ||

| Line 56: | Line 38: | ||

<blockquote> | <blockquote> | ||

| − | * A model, i.e., a joint distribution $\qypsi$. Depending the software used, the model can be implemented using a script or a graphical user interface. $\monolix$ is extremely flexible and allows us to combine both. It is possible for instance to code the structural model using $\mlxtran$ and use the GUI for implementing the statistical model. Whatever the options selected, the complete model can always be saved as a text file. <br><br> | + | * A model, i.e., a joint distribution $\qypsi$. Depending on the software used, the model can be implemented using a script or a graphical user interface. $\monolix$ is extremely flexible and allows us to combine both. It is possible for instance to code the structural model using $\mlxtran$ and use the [http://en.wikipedia.org/wiki/GUI GUI] for implementing the statistical model. Whatever the options selected, the complete model can always be saved as a text file. <br><br> |

| − | * Inputs $\by$, $\bc$, $\bu$ and $\bt$. All of these variables tend to be stored in a unique data file (see [[Visualization#Data exploration | Data Exploration ]] Section). <br><br> | + | * Inputs $\by$, $\bc$, $\bu$ and $\bt$. All of these variables tend to be stored in a unique data file (see the [[Visualization#Data exploration | Data Exploration ]] Section). <br><br> |

| − | * An algorithm which allows us to maximize $\int \pypsi(\by,\bpsi ;\theta) \, d \bpsi$ with respect to $\theta$. Each software package has its own algorithms implemented. It is not our goal here to rate and compare the various algorithms and implementations. We will use exclusively the SAEM algorithm as described in [[The SAEM algorithm for estimating population parameters | | + | * An algorithm which allows us to maximize $\int \pypsi(\by,\bpsi ;\theta) \, d \bpsi$ with respect to $\theta$. Each software package has its own algorithms implemented. It is not our goal here to rate and compare the various algorithms and implementations. We will use exclusively the SAEM algorithm as described in [[The SAEM algorithm for estimating population parameters | The SAEM algorithm]] and implemented in $\monolix$ as we are entirely satisfied by both its theoretical and practical qualities: <br><br> |

| − | ** The algorithms implemented in $\monolix$ including SAEM and its extensions (mixture models, hidden Markov models, SDE-based model, censored data, etc.) have been published in statistical journals. Furthermore, | + | ** The algorithms implemented in $\monolix$ including SAEM and its extensions ([[Mixture models|mixture models]], [[Hidden Markov models|hidden Markov models]], [[Stochastic differential equations based models|SDE-based model]], [http://en.wikipedia.org/wiki/Censored_data censored data], etc.) have been published in statistical journals. Furthermore, convergence of SAEM has been rigorously proved.<br><br> |

| − | ** The SAEM implementation in $\monolix$ | + | ** The SAEM implementation in $\monolix$ is extremely efficient for a wide variety of complex models.<br><br> |

| − | ** | + | ** The SAEM implementation in $\monolix$ was done by the same group that proposed the algorithm and studied in detail its theoretical and practical properties. |

</blockquote> | </blockquote> | ||

| Line 67: | Line 49: | ||

{{Remarks | {{Remarks | ||

|title=Remark | |title=Remark | ||

| − | |text= It is important to highlight the fact that for a parameter $\psi_i$ whose distribution is the | + | |text= It is important to highlight the fact that for a parameter $\psi_i$ whose distribution is the transformation of a normal one (log-normal, logit-normal, etc.) the MLE $\hat{\psi}_{\rm pop}$ of the reference parameter $\psi_{\rm pop}$ is neither the mean nor the mode of the distribution. It is in fact the median. |

To show why this is the case, let $h$ be a nonlinear, twice continuously derivable and strictly increasing function such that $h(\psi_i)$ is normally distributed. | To show why this is the case, let $h$ be a nonlinear, twice continuously derivable and strictly increasing function such that $h(\psi_i)$ is normally distributed. | ||

| Line 74: | Line 56: | ||

* First we show that it is not the mean. By definition, the MLE of $h(\psi_{\rm pop})$ is $h(\hat{\psi}_{\rm pop})$. Thus, the estimated distribution of $h(\psi_i)$ is the normal distribution with mean $h(\hat{\psi}_{\rm pop})$, but $\esp{h(\psi_i)} = h(\hat{\psi}_{\rm pop})$ implies that $\esp{\psi_i} \neq \hat{\psi}_{\rm pop}$ since $h$ is nonlinear. In other words, $\hat{\psi}_{\rm pop}$ is not the mean of the estimated distribution of $\psi_i$. | * First we show that it is not the mean. By definition, the MLE of $h(\psi_{\rm pop})$ is $h(\hat{\psi}_{\rm pop})$. Thus, the estimated distribution of $h(\psi_i)$ is the normal distribution with mean $h(\hat{\psi}_{\rm pop})$, but $\esp{h(\psi_i)} = h(\hat{\psi}_{\rm pop})$ implies that $\esp{\psi_i} \neq \hat{\psi}_{\rm pop}$ since $h$ is nonlinear. In other words, $\hat{\psi}_{\rm pop}$ is not the mean of the estimated distribution of $\psi_i$. | ||

| − | + | ||

| + | * Next we show that it is not the mode. Let $f$ be the pdf of $\psi_i$ and let $f_h$ be the pdf of $h(\psi_i)$. By definition, for any $h(t)\in \mathbb{R}$, | ||

{{Equation1 | {{Equation1 | ||

| Line 106: | Line 89: | ||

=== Example === | === Example === | ||

| − | Let us again look at the model used in [[Visualization#Model exploration | Model Visualization]] Section. For the case of a unique dose $D$ given at time $t=0$, the structural model is written: | + | Let us again look at the model used in the [[Visualization#Model exploration | Model Visualization]] Section. For the case of a unique dose $D$ given at time $t=0$, the structural model is written: |

{{Equation1 | {{Equation1 | ||

| Line 161: | Line 144: | ||

| − | Once the model is implemented, tasks such as maximum likelihood estimation can be performed using the SAEM algorithm. | + | Once the model is implemented, tasks such as maximum likelihood estimation can be performed using the SAEM algorithm. Certain settings in SAEM must be provided by the user. Even though SAEM is quite insensitive to the initial parameter values, |

| − | Certain settings in SAEM must be provided by the user. Even though SAEM is quite insensitive to the initial parameter values, | ||

it is possible to perform a preliminary sensitivity analysis in order to select "good" initial values. | it is possible to perform a preliminary sensitivity analysis in order to select "good" initial values. | ||

| − | {{ | + | {{ImageWithCaption|image=Vsaem2.png|caption=Looking for good initial values for SAEM}} |

| − | | | + | |

| − | | | + | |

| − | |||

| − | |||

Then, when we run SAEM, it converges easily and quickly to the MLE: | Then, when we run SAEM, it converges easily and quickly to the MLE: | ||

| − | + | ||

| + | {{JustCode | ||

|code=<pre style="background-color:#EFEFEF; border:none;">Estimation of the population parameters | |code=<pre style="background-color:#EFEFEF; border:none;">Estimation of the population parameters | ||

| Line 192: | Line 173: | ||

a_1 : 0.345 | a_1 : 0.345 | ||

</pre> }} | </pre> }} | ||

| − | |||

| − | |||

| − | |||

| − | Parameter estimation can therefore be seen as estimating reference values and variance of the random effects. | + | Parameter estimation can therefore be seen as estimating the reference values and variance of the random effects. |

| − | In addition to these numbers, it is important to be able to graphically represent these distributions in order to see them and therefore understand them better. In effect, the interpretation of certain parameters is not always simple. Of course, we know what a normal distribution represents and in particular its mean, median and mode, which are equal (see the distribution of $Cl$ below for instance). These measures of central tendency can be different among themselves for other asymmetric distributions such as log-normal (see the distribution of $ka$). | + | In addition to these numbers, it is important to be able to graphically represent these distributions in order to see them and therefore understand them better. In effect, the interpretation of certain parameters is not always simple. Of course, we know what a normal distribution represents and in particular its mean, median and mode, which are equal (see the distribution of $Cl$ below for instance). These measures of central tendency can be different among themselves for other asymmetric distributions such as the log-normal (see the distribution of $ka$). |

| − | Interpreting dispersion terms like $\omega_{ka}$ and $\omega_{V}$ is not obvious either when the parameter distributions are not normal. In such cases, quartiles or quantiles of order 5% and 95% (for example) may be useful for quantitively describing variability of these parameters. | + | Interpreting dispersion terms like $\omega_{ka}$ and $\omega_{V}$ is not obvious either when the parameter distributions are not normal. In such cases, quartiles or quantiles of order 5% and 95% (for example) may be useful for quantitively describing the variability of these parameters. |

| Line 241: | Line 219: | ||

<br> | <br> | ||

| − | ==Bayesian estimation== | + | ==Bayesian estimation of the population parameters== |

| − | The ''Bayesian approach'' considers $\theta$ as a random vector with a ''prior distribution'' $\qth$. We can then define the posterior distribution of $\theta$: | + | The [http://en.wikipedia.org/wiki/Bayesian_probability ''Bayesian approach''] considers $\theta$ as a random vector with a ''prior distribution'' $\qth$. We can then define the posterior distribution of $\theta$: |

{{Equation1 | {{Equation1 | ||

| Line 251: | Line 229: | ||

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | We can estimate this conditional distribution and derive any statistics (posterior mean, standard deviation, percentiles, etc.) or derive the so-called ''Maximum a Posteriori'' (MAP) estimate of $\theta$: | + | We can estimate this conditional distribution and derive any statistics (posterior mean, standard deviation, percentiles, etc.) or derive the so-called [http://en.wikipedia.org/wiki/Maximum_a_posteriori_estimation ''Maximum a Posteriori'' (MAP) estimate] of $\theta$: |

{{Equation1 | {{Equation1 | ||

| Line 266: | Line 244: | ||

</math> }} | </math> }} | ||

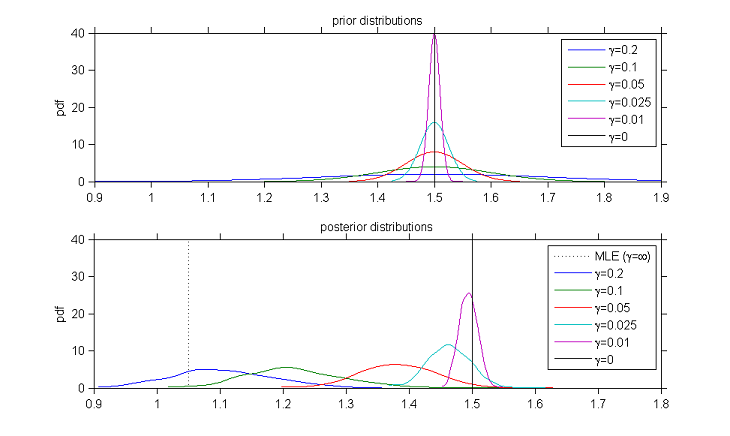

| − | The MAP estimate is a trade-off between the MLE which maximizes ${\llike}(\theta ; \by)$ and $\theta_0$ which minimizes $(\theta - \theta_0)^2$. The weight given to the prior directly depends on the variance of the prior distribution: the smaller $\gamma^2$ is, the closer to $\theta_0$ the MAP is. The limiting distribution considers that $\gamma^2=0$: this prior means here that $\theta$ is fixed as $\theta_0$ and no longer needs to be estimated. | + | The MAP estimate is a trade-off between the [http://en.wikipedia.org/wiki/Maximum_likelihood_estimation MLE] which maximizes ${\llike}(\theta ; \by)$ and $\theta_0$ which minimizes $(\theta - \theta_0)^2$. The weight given to the prior directly depends on the variance of the prior distribution: the smaller $\gamma^2$ is, the closer to $\theta_0$ the MAP is. The limiting distribution considers that $\gamma^2=0$: this prior means here that $\theta$ is fixed as $\theta_0$ and no longer needs to be estimated. |

| − | Both the Bayesian and frequentist approaches have their supporters and detractors. But rather than being dogmatic and blindly following the same rule-book every time, we need to be pragmatic and ask the right methodological questions when confronted with a new problem. | + | Both the Bayesian and [http://en.wikipedia.org/wiki/Frequentist_probability frequentist] approaches have their supporters and detractors. But rather than being dogmatic and blindly following the same rule-book every time, we need to be pragmatic and ask the right methodological questions when confronted with a new problem. |

| − | We have to remember that Bayesian methods have been extremely successful, in particular for numerical calculations. For instance, (Bayesian) MCMC methods allow us to estimate more or less any conditional distribution coming from any hierarchical model, whereas frequentist approaches such as maximum likelihood estimation can be much more difficult to implement. | + | We have to remember that Bayesian methods have been extremely successful, in particular for numerical calculations. For instance, (Bayesian) [http://en.wikipedia.org/wiki/Markov_chain_Monte_Carlo MCMC methods] allow us to estimate more or less any conditional distribution coming from any hierarchical model, whereas frequentist approaches such as maximum likelihood estimation can be much more difficult to implement. |

All things said, the problem comes down to knowing whether the data contains sufficient information to answer a given question, and whether some other information may be available to help answer it. This is the essence of the art of modeling: finding the right compromise between the confidence we have in the data and prior knowledge of the problem. Each problem is different and requires a specific approach. For instance, if all the patients in a pharmacokinetic trial have essentially the same weight, it is pointless to estimate a relationship between weight and the model's PK parameters using the trial data. In this case, the modeler would be better served trying to use prior information based on physiological criteria rather than just a statistical model. | All things said, the problem comes down to knowing whether the data contains sufficient information to answer a given question, and whether some other information may be available to help answer it. This is the essence of the art of modeling: finding the right compromise between the confidence we have in the data and prior knowledge of the problem. Each problem is different and requires a specific approach. For instance, if all the patients in a pharmacokinetic trial have essentially the same weight, it is pointless to estimate a relationship between weight and the model's PK parameters using the trial data. In this case, the modeler would be better served trying to use prior information based on physiological criteria rather than just a statistical model. | ||

| Line 341: | Line 319: | ||

| − | <div style="margin-left: | + | <div style="margin-left:15%; margin-right:32%; align:center"> |

{{{!}} class="wikitable" align="center" style="width:100%" | {{{!}} class="wikitable" align="center" style="width:100%" | ||

{{!}} $\gamma$ {{!}}{{!}} 0 {{!}}{{!}} 0.01 {{!}}{{!}} 0.025 {{!}}{{!}} 0.05 {{!}}{{!}} 0.1 {{!}}{{!}} 0.2 {{!}}{{!}} $+ \infty$ | {{!}} $\gamma$ {{!}}{{!}} 0 {{!}}{{!}} 0.01 {{!}}{{!}} 0.025 {{!}}{{!}} 0.05 {{!}}{{!}} 0.1 {{!}}{{!}} 0.2 {{!}}{{!}} $+ \infty$ | ||

| Line 348: | Line 326: | ||

{{!}}}</div> | {{!}}}</div> | ||

| − | {{ImageWithCaption|image=bayes1.png|caption= | + | {{ImageWithCaption|image=bayes1.png|caption=Prior and posterior distributions of $ka_{\rm pop}$ for different values of $\gamma$}} |

| Line 356: | Line 334: | ||

<br> | <br> | ||

| − | == | + | |

| + | == Estimation of the Fisher information matrix == | ||

The variance of the estimator $\thmle$ and thus confidence intervals can be derived from the [[Estimation of the observed Fisher information matrix|observed Fisher information matrix (F.I.M.)]], which itself is calculated using the observed likelihood (i.e., the pdf of the observations $\by$): | The variance of the estimator $\thmle$ and thus confidence intervals can be derived from the [[Estimation of the observed Fisher information matrix|observed Fisher information matrix (F.I.M.)]], which itself is calculated using the observed likelihood (i.e., the pdf of the observations $\by$): | ||

| Line 414: | Line 393: | ||

<br> | <br> | ||

| − | == | + | == Estimation of the individual parameters == |

Once $\theta$ has been estimated, the conditional distribution $\pmacro(\psi_i | y_i ; \hat{\theta})$ of the individual parameters $\psi_i$ can be estimated for each individual $i$ using the [[The Metropolis-Hastings algorithm for simulating the individual parameters| Metropolis-Hastings algorithm]]. For each $i$, this algorithm generates a sequence $(\psi_i^{k}, k \geq 1)$ which converges in distribution to the conditional distribution $\pmacro(\psi_i | y_i ; \hat{\theta})$ and that can be used for estimating any summary statistic of this distribution (mean, standard deviation, quantiles, etc.). | Once $\theta$ has been estimated, the conditional distribution $\pmacro(\psi_i | y_i ; \hat{\theta})$ of the individual parameters $\psi_i$ can be estimated for each individual $i$ using the [[The Metropolis-Hastings algorithm for simulating the individual parameters| Metropolis-Hastings algorithm]]. For each $i$, this algorithm generates a sequence $(\psi_i^{k}, k \geq 1)$ which converges in distribution to the conditional distribution $\pmacro(\psi_i | y_i ; \hat{\theta})$ and that can be used for estimating any summary statistic of this distribution (mean, standard deviation, quantiles, etc.). | ||

| Line 427: | Line 406: | ||

<br> | <br> | ||

| − | == | + | == Estimation of the observed log-likelihood == |

| Line 438: | Line 417: | ||

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | The observed log-likelihood cannot be computed in closed form for nonlinear mixed effects models, but can be estimated using the methods described in the [[Estimation of the log-likelihood]] Section. The estimated log-likelihood can then be used for performing likelihood ratio tests and for computing information criteria such as AIC and BIC (see the [[ | + | The observed log-likelihood cannot be computed in closed form for nonlinear mixed effects models, but can be estimated using the methods described in the [[Estimation of the log-likelihood]] Section. The estimated log-likelihood can then be used for performing likelihood ratio tests and for computing information criteria such as AIC and BIC (see the [[Model evaluation]] Section). |

| + | |||

| + | |||

| + | <br> | ||

| + | |||

| + | == Bibliography == | ||

| + | |||

| + | <bibtex> | ||

| + | @article{Monolix, | ||

| + | author = {Lixoft}, | ||

| + | title = {Monolix 4.2}, | ||

| + | year={2012} | ||

| + | journal = {http://www.lixoft.eu/products/monolix/product-monolix-overview}, | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @article{comets2011package, | ||

| + | title={saemix: Stochastic Approximation Expectation Maximization (SAEM) algorithm. R package version 0.96.1}, | ||

| + | author={Comets, E. and Lavenu, A. and Lavielle, M.}, | ||

| + | journal = {http://cran.r-project.org/web/packages/saemix/index.html}, | ||

| + | year={2013} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @article{nlmefitsa, | ||

| + | title={nlmefitsa: fit nonlinear mixed-effects model with stochastic EM algorithm. Matlab R2013a function}, | ||

| + | author={The MathWorks}, | ||

| + | journal = {http://www.mathworks.fr/fr/help/stats/nlmefitsa.html}, | ||

| + | year={2013} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @article{beal1992nonmem, | ||

| + | title={NONMEM users guides}, | ||

| + | author={Beal, S.L. and Sheiner, L.B. and Boeckmann, A. and Bauer, R.J.}, | ||

| + | journal={San Francisco, NONMEM Project Group, University of California}, | ||

| + | year={1992} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @book{pinheiro2000mixed, | ||

| + | title={Mixed effects models in S and S-PLUS}, | ||

| + | author={Pinheiro, J.C. and Bates, D.M.}, | ||

| + | year={2000}, | ||

| + | publisher={Springer Verlag} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @article{pinheiro2010r, | ||

| + | title={the R Core team (2009) nlme: Linear and Nonlinear Mixed Effects Models. R package version 3.1-96}, | ||

| + | author={Pinheiro, J. and Bates, D. and DebRoy, S. and Sarkar, D.}, | ||

| + | journal={R Foundation for Statistical Computing, Vienna}, | ||

| + | year={2010} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @article{spiegelhalter2003winbugs, | ||

| + | title={WinBUGS user manual}, | ||

| + | author={Spiegelhalter, D. and Thomas, A. and Best, N. and Lunn, D.}, | ||

| + | journal={Cambridge: MRC Biostatistics Unit}, | ||

| + | year={2003} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @Manual{docSPSS, | ||

| + | title = {Linear mixed-effects modeling in SPSS. An introduction to the MIXED procedure}, | ||

| + | author = {SPSS}, | ||

| + | year = {2002}, | ||

| + | note={Technical Report} | ||

| + | } | ||

| + | </bibtex> | ||

| + | |||

| + | <bibtex> | ||

| + | @Manual{docSAS, | ||

| + | title = {The NLMMIXED procedure, SAS/STAT 9.2 User's Guide}, | ||

| + | chapter = {61}, | ||

| + | pages = {4337--4435}, | ||

| + | author = {SAS}, | ||

| + | year = {2008} | ||

| + | } | ||

| + | </bibtex> | ||

{{Back&Next | {{Back&Next | ||

|linkBack=Visualization | |linkBack=Visualization | ||

| − | |linkNext= | + | |linkNext=Model evaluation }} |

Latest revision as of 16:20, 25 June 2013

Contents

Introduction

In the modeling context, we usually assume that we have data that includes observations $\by$, measurement times $\bt$ and possibly additional regression variables $\bx$. There may also be individual covariates $\bc$, and in pharmacological applications the dose regimen $\bu$. For clarity, in the following notation we will omit the design variables $\bt$, $\bx$ and $\bu$, and the covariates $\bc$.

Here, we find ourselves in the classical framework of incomplete data models. Indeed, only $\by = (y_{ij})$ is observed in the joint model $\pypsi(\by,\bpsi;\theta)$.

Estimation tasks are common ones seen in statistics:

- Estimate the population parameter $\theta$ using the available observations and possibly a priori information that is available.

- Evaluate the precision of the proposed estimates.

- Reconstruct missing data, here being the individual parameters $\bpsi=(\psi_i, 1\leq i \leq N)$.

- Estimate the log-likelihood for a given model, i.e., for a given joint distribution $\qypsi$ and value of $\theta$.

Maximum likelihood estimation of the population parameters

Definitions

Maximum likelihood estimation consists of maximizing with respect to $\theta$ the observed likelihood defined by:

Maximum likelihood estimation of the population parameter $\theta$ requires:

- A model, i.e., a joint distribution $\qypsi$. Depending on the software used, the model can be implemented using a script or a graphical user interface. $\monolix$ is extremely flexible and allows us to combine both. It is possible for instance to code the structural model using $\mlxtran$ and use the GUI for implementing the statistical model. Whatever the options selected, the complete model can always be saved as a text file.

- Inputs $\by$, $\bc$, $\bu$ and $\bt$. All of these variables tend to be stored in a unique data file (see the Data Exploration Section).

- An algorithm which allows us to maximize $\int \pypsi(\by,\bpsi ;\theta) \, d \bpsi$ with respect to $\theta$. Each software package has its own algorithms implemented. It is not our goal here to rate and compare the various algorithms and implementations. We will use exclusively the SAEM algorithm as described in The SAEM algorithm and implemented in $\monolix$ as we are entirely satisfied by both its theoretical and practical qualities:

- The algorithms implemented in $\monolix$ including SAEM and its extensions (mixture models, hidden Markov models, SDE-based model, censored data, etc.) have been published in statistical journals. Furthermore, convergence of SAEM has been rigorously proved.

- The SAEM implementation in $\monolix$ is extremely efficient for a wide variety of complex models.

- The SAEM implementation in $\monolix$ was done by the same group that proposed the algorithm and studied in detail its theoretical and practical properties.

Example

Let us again look at the model used in the Model Visualization Section. For the case of a unique dose $D$ given at time $t=0$, the structural model is written:

where $Cc$ is the concentration in the central compartment and $h$ the hazard function for the event of interest (hemorrhaging). Supposing a constant error model for the concentration, the model for the observations can be easily implemented using $\mlxtran$.

Here, amtDose is a reserved keyword for the last administered dose.

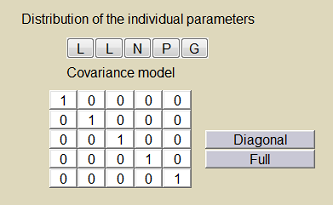

The model's parameters are the absorption rate constant $ka$, the volume of distribution $V$, the clearance $Cl$, the baseline hazard $h_0$ and the coefficient $\gamma$. The statistical model for the individual parameters can be defined in the $\monolix$ project file (left) and/or the $\monolix$ GUI (right):

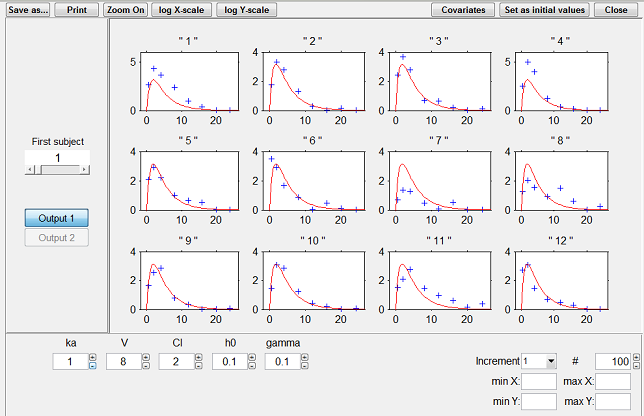

Once the model is implemented, tasks such as maximum likelihood estimation can be performed using the SAEM algorithm. Certain settings in SAEM must be provided by the user. Even though SAEM is quite insensitive to the initial parameter values, it is possible to perform a preliminary sensitivity analysis in order to select "good" initial values.

|

Looking for good initial values for SAEM

|

Then, when we run SAEM, it converges easily and quickly to the MLE:

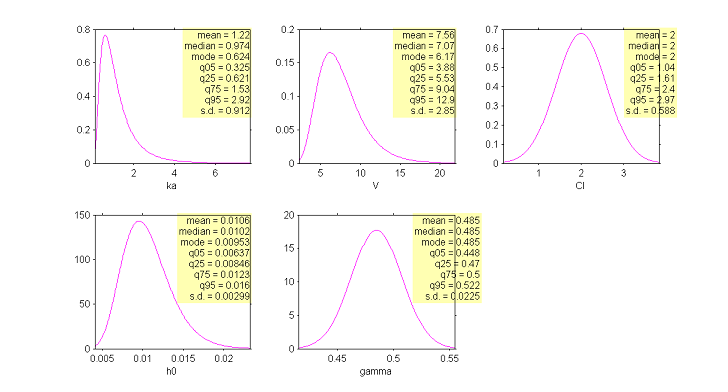

Parameter estimation can therefore be seen as estimating the reference values and variance of the random effects.

In addition to these numbers, it is important to be able to graphically represent these distributions in order to see them and therefore understand them better. In effect, the interpretation of certain parameters is not always simple. Of course, we know what a normal distribution represents and in particular its mean, median and mode, which are equal (see the distribution of $Cl$ below for instance). These measures of central tendency can be different among themselves for other asymmetric distributions such as the log-normal (see the distribution of $ka$).

Interpreting dispersion terms like $\omega_{ka}$ and $\omega_{V}$ is not obvious either when the parameter distributions are not normal. In such cases, quartiles or quantiles of order 5% and 95% (for example) may be useful for quantitively describing the variability of these parameters.

|

Estimation of the population distributions of the individual parameters of the model

|

Bayesian estimation of the population parameters

The Bayesian approach considers $\theta$ as a random vector with a prior distribution $\qth$. We can then define the posterior distribution of $\theta$:

We can estimate this conditional distribution and derive any statistics (posterior mean, standard deviation, percentiles, etc.) or derive the so-called Maximum a Posteriori (MAP) estimate of $\theta$:

The MAP estimate therefore maximizes a penalized version of the observed likelihood. In other words, maximum a posteriori estimation reduces to penalized maximum likelihood estimation. Suppose for instance that $\theta$ is a scalar parameter and the prior is a normal distribution with mean $\theta_0$ and variance $\gamma^2$. Then, the MAP estimate minimizes

The MAP estimate is a trade-off between the MLE which maximizes ${\llike}(\theta ; \by)$ and $\theta_0$ which minimizes $(\theta - \theta_0)^2$. The weight given to the prior directly depends on the variance of the prior distribution: the smaller $\gamma^2$ is, the closer to $\theta_0$ the MAP is. The limiting distribution considers that $\gamma^2=0$: this prior means here that $\theta$ is fixed as $\theta_0$ and no longer needs to be estimated.

Both the Bayesian and frequentist approaches have their supporters and detractors. But rather than being dogmatic and blindly following the same rule-book every time, we need to be pragmatic and ask the right methodological questions when confronted with a new problem.

We have to remember that Bayesian methods have been extremely successful, in particular for numerical calculations. For instance, (Bayesian) MCMC methods allow us to estimate more or less any conditional distribution coming from any hierarchical model, whereas frequentist approaches such as maximum likelihood estimation can be much more difficult to implement.

All things said, the problem comes down to knowing whether the data contains sufficient information to answer a given question, and whether some other information may be available to help answer it. This is the essence of the art of modeling: finding the right compromise between the confidence we have in the data and prior knowledge of the problem. Each problem is different and requires a specific approach. For instance, if all the patients in a pharmacokinetic trial have essentially the same weight, it is pointless to estimate a relationship between weight and the model's PK parameters using the trial data. In this case, the modeler would be better served trying to use prior information based on physiological criteria rather than just a statistical model.

Therefore, we can use information available to us, of course! Why not? But this information needs to be pertinent. Systematically using a prior for the parameters is not always meaningful. Can we reasonable suppose that we have access to such information? For continuous data for example, what does putting a prior on the residual error model's parameters mean in reality? A reasoned statistical approach consists of only including prior information for certain parameters (those for which we have real prior information) and having confidence in the data for the others.

$\monolix$ allows this hybrid approach which reconciles the Bayesian and frequentist approaches. A given parameter can be:

- a fixed constant if we have absolute confidence in its value or the data does not allow it to be estimated, essentially due to identifiability constraints.

- estimated by maximum likelihood, either because we have great confidence in the data or have no information on the parameter.

- estimated by introducing a prior and calculating the MAP estimate.

- estimated by introducing a prior and then estimating the posterior distribution.

We put aside dealing with the fixed components of $\theta$ in the following. Here are some possible situations:

- Combined maximum likelihood and maximum a posteriori estimation: decompose $\theta$ into $(\theta_E,\theta_{M})$ where $\theta_E$ are the components of $\theta$ to be estimated with MLE and $\theta_{M}$ those with a prior distribution whose posterior distribution is to be maximized. Then, $(\hat{\theta}_E , \hat{\theta}_{M} )$ below maximizes the penalized likelihood of $(\theta_E,\theta_{M})$:

- Combined maximum likelihood and posterior distribution estimation: here, decompose $\theta$ into $(\theta_E,\theta_{R})$ where $\theta_E$ are the components of $\theta$ to be estimated with MLE and $\theta_{R}$ those with a prior distribution whose posterior distribution is to be estimated. We propose the following strategy for estimating $\theta_E$ and $\theta_{R}$:

- Compute the maximum likelihood of $\theta_E$:

- Estimate the conditional distribution $\pmacro(\theta_{R} | \by ;\hat{\theta}_E)$.

where ${\llike} (\theta_E , \theta_{M}; \by) \ \ \eqdef \ \ \log\left(\py(\by | \theta_{M}; \theta_E)\right).$

It is then straightforward to extend this approach to more complex situations where some components of $\theta$ are estimated with MLE, others using MAP estimation and others still by estimating their conditional distributions.

Estimation of the Fisher information matrix

The variance of the estimator $\thmle$ and thus confidence intervals can be derived from the observed Fisher information matrix (F.I.M.), which itself is calculated using the observed likelihood (i.e., the pdf of the observations $\by$):

\(

\ofim(\thmle ; \by) \ \ \eqdef \ \ - \displaystyle{ \frac{\partial^2}{\partial \theta^2} }\log({\like}(\thmle ; \by)) .

\)

|

(1) |

Then, the variance-covariance matrix of the maximum likelihood estimator $\thmle$ can be estimated by the inverse of the observed F.I.M. Standard errors (s.e.) for each component of $\thmle$ are their standard deviations, i.e., the square-root of the diagonal elements of this covariance matrix. $\monolix$ also displays the (estimated) relative standard errors (r.s.e.), i.e., the (estimated) standard error divided by the value of the estimated parameter.

The F.I.M. can be used for detecting overparametrization of the structural model. In effect, if the model is poorly identifiable, certain estimators will be quite correlated and the F.I.M. will therefore be poorly conditioned and difficult to inverse. Suppose for example that we want to fit a two compartment PK model to the same data as before. The output is shown below. The large values for the relative standard errors for the inter-compartmental clearance $Q$ and the volume of the peripheral compartment $V_2$ mean that the data does not allow us to estimate well these two parameters.

The Fisher information criteria is also widely used in optimal experimental design. Indeed, minimizing the variance of the estimator corresponds to maximizing the information. Then, estimators and designs can be evaluated by looking at certain summary statistics of the covariance matrix (like the determinant or trace for instance).

Estimation of the individual parameters

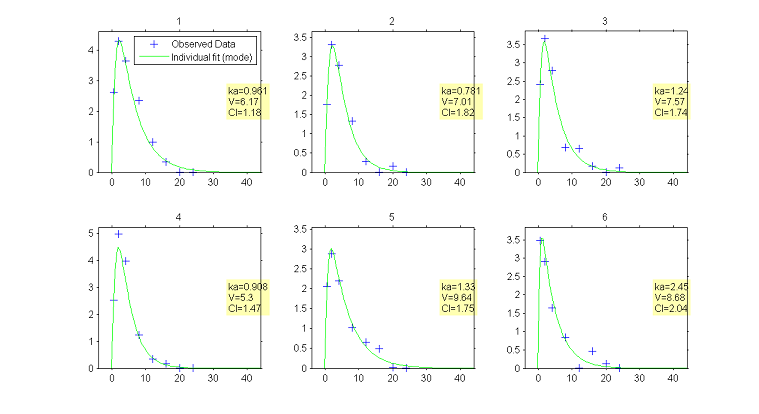

Once $\theta$ has been estimated, the conditional distribution $\pmacro(\psi_i | y_i ; \hat{\theta})$ of the individual parameters $\psi_i$ can be estimated for each individual $i$ using the Metropolis-Hastings algorithm. For each $i$, this algorithm generates a sequence $(\psi_i^{k}, k \geq 1)$ which converges in distribution to the conditional distribution $\pmacro(\psi_i | y_i ; \hat{\theta})$ and that can be used for estimating any summary statistic of this distribution (mean, standard deviation, quantiles, etc.).

The mode of this conditional distribution can be estimated using this sequence or by maximizing $\pmacro(\psi_i | y_i ; \hat{\theta})$ using numerical methods.

The choice of using the conditional mean or the conditional mode is arbitrary. By default, $\monolix$ uses the conditional mode, taking the philosophy that the "most likely" values of the individual parameters are the most suited for computing the "most likely" predictions.

|

Predicted concentrations for 6 individuals using the estimated conditional modes of the individual PK parameters

|

Estimation of the observed log-likelihood

Once $\theta$ has been estimated, the observed log-likelihood of $\hat{\theta}$ is defined as

The observed log-likelihood cannot be computed in closed form for nonlinear mixed effects models, but can be estimated using the methods described in the Estimation of the log-likelihood Section. The estimated log-likelihood can then be used for performing likelihood ratio tests and for computing information criteria such as AIC and BIC (see the Model evaluation Section).

Bibliography

Lixoft - Monolix 4.2

- http://www.lixoft.eu/products/monolix/product-monolix-overview ,2012

- BibtexAuthor : Lixoft

Title : Monolix 4.2

In : http://www.lixoft.eu/products/monolix/product-monolix-overview -

Address :

Date : 2012

Comets, E., Lavenu, A., Lavielle, M. - saemix: Stochastic Approximation Expectation Maximization (SAEM) algorithm. R package version 0.96.1

- http://cran.r-project.org/web/packages/saemix/index.html ,2013

- BibtexAuthor : Comets, E., Lavenu, A., Lavielle, M.

Title : saemix: Stochastic Approximation Expectation Maximization (SAEM) algorithm. R package version 0.96.1

In : http://cran.r-project.org/web/packages/saemix/index.html -

Address :

Date : 2013

The MathWorks - nlmefitsa: fit nonlinear mixed-effects model with stochastic EM algorithm. Matlab R2013a function

- http://www.mathworks.fr/fr/help/stats/nlmefitsa.html ,2013

- BibtexAuthor : The MathWorks

Title : nlmefitsa: fit nonlinear mixed-effects model with stochastic EM algorithm. Matlab R2013a function

In : http://www.mathworks.fr/fr/help/stats/nlmefitsa.html -

Address :

Date : 2013

Beal, S.L., Sheiner, L.B., Boeckmann, A., Bauer, R.J. - NONMEM users guides

- San Francisco, NONMEM Project Group, University of California ,1992

- BibtexAuthor : Beal, S.L., Sheiner, L.B., Boeckmann, A., Bauer, R.J.

Title : NONMEM users guides

In : San Francisco, NONMEM Project Group, University of California -

Address :

Date : 1992

Pinheiro, J.C., Bates, D.M. - Mixed effects models in S and S-PLUS

- Springer Verlag,2000

- BibtexAuthor : Pinheiro, J.C., Bates, D.M.

Title : Mixed effects models in S and S-PLUS

In : -

Address :

Date : 2000

Pinheiro, J., Bates, D., DebRoy, S., Sarkar, D. - the R Core team (2009) nlme: Linear and Nonlinear Mixed Effects Models. R package version 3.1-96

- R Foundation for Statistical Computing, Vienna ,2010

- BibtexAuthor : Pinheiro, J., Bates, D., DebRoy, S., Sarkar, D.

Title : the R Core team (2009) nlme: Linear and Nonlinear Mixed Effects Models. R package version 3.1-96

In : R Foundation for Statistical Computing, Vienna -

Address :

Date : 2010

Spiegelhalter, D., Thomas, A., Best, N., Lunn, D. - WinBUGS user manual

- Cambridge: MRC Biostatistics Unit ,2003

- BibtexAuthor : Spiegelhalter, D., Thomas, A., Best, N., Lunn, D.

Title : WinBUGS user manual

In : Cambridge: MRC Biostatistics Unit -

Address :

Date : 2003

SPSS - Linear mixed-effects modeling in SPSS. An introduction to the MIXED procedure

SAS - The NLMMIXED procedure, SAS/STAT 9.2 User's Guide