Difference between revisions of "Estimation of the log-likelihood"

m (→Estimation of the log-likelihood via linearization) |

m |

||

| (21 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | + | == Estimation using importance sampling == | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | The observed log-likelihood ${\llike}(\theta;\by)=\log({\like}(\theta;\by))$ can be estimated without requiring approximation of the model, using a Monte Carlo approach. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | The observed log-likelihood ${llike}(\theta;\by)=\log({\like}(\theta;\by))$ can be estimated without | ||

Since | Since | ||

{{Equation1 | {{Equation1 | ||

| − | |equation=<math> \begin{eqnarray} | + | |equation=<math>\begin{eqnarray} |

{\llike}(\theta;\by) &\eqdef& \log(\py(\by;\theta)) \\ | {\llike}(\theta;\by) &\eqdef& \log(\py(\by;\theta)) \\ | ||

&=& \sum_{i=1}^{N} \log(\pyi(y_i;\theta)), | &=& \sum_{i=1}^{N} \log(\pyi(y_i;\theta)), | ||

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | we | + | we can estimate $\log(\pyi(y_i;\theta))$ for each individual and derive an estimate of the log-likelihood as the sum of these individual log-likelihoods. We will now explain how to estimate $\log(\pyi(y_i;\theta))$ for any individual $i$. |

| + | |||

| + | Using the $\phi$-representation of the model, notice first that $\pyi(y_i;\theta)$ can be decomposed as follows: | ||

| − | |||

{{Equation1 | {{Equation1 | ||

|equation=<math>\begin{eqnarray} | |equation=<math>\begin{eqnarray} | ||

\pyi(y_i;\theta) &=& | \pyi(y_i;\theta) &=& | ||

| − | \displaystyle{ \int \pyipsii(y_i,\ | + | \displaystyle{ \int \pyipsii(y_i,\phi_i;\theta)\,d\phi_i }\\ |

| − | &=& \displaystyle{\int \ | + | &=& \displaystyle{\int \pcyiphii(y_i {{!}} \phi_i;\theta)\pphii(\phi_i;\theta)\,d\phi_i } \\ |

| − | &=& \esps{\ | + | &=& \esps{\qphii}{\pcyiphii(y_i {{!}} \phi_i;\theta)}. |

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | $\pyi(y_i;\theta)$ is expressed as a mean. It can therefore be approximated by an empirical mean using a Monte | + | Thus, $\pyi(y_i;\theta)$ is expressed as a mean. It can therefore be approximated by an empirical mean using a Monte Carlo procedure: |

<blockquote> | <blockquote> | ||

| − | 1. | + | 1. Draw $M$ independent values $\phi_i^{(1)}$, $\phi_i^{(2)}$, ..., $\phi_i^{(M)}$ from the normal distribution $\qphii(\, \cdot \, ; \theta)$. |

</blockquote> | </blockquote> | ||

<blockquote> | <blockquote> | ||

| − | 2. | + | 2. Estimate $ \pyi(y_i;\theta)$ with |

| + | </blockquote> | ||

{{Equation1 | {{Equation1 | ||

|equation=<math> | |equation=<math> | ||

| − | \hat{p}_{i,M} = \displaystyle{ \frac{1}{M} }\sum_{m=1}^{M}\ | + | \hat{p}_{i,M} = \displaystyle{\frac{1}{M} }\sum_{m=1}^{M}\pcyiphii(y_i {{!}} \phi_i^{(m)};\theta) . |

</math> }} | </math> }} | ||

| − | |||

| Line 293: | Line 43: | ||

{{Equation1 | {{Equation1 | ||

|equation=<math>\begin{eqnarray} | |equation=<math>\begin{eqnarray} | ||

| − | \esp{\hat{p}_{i,M} }&=& \esps{\ | + | \esp{\hat{p}_{i,M} }&=& \esps{\qphii}{\pcyiphii(y_i {{!}} \phi_i^{(m)};\theta)} \\ |

| − | &=& \pyi(y_i;\theta) | + | &=& \pyi(y_i;\theta) . |

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | Furthermore, it is | + | Furthermore, it is consistent since its variance decreases as $1/M$: |

{{Equation1 | {{Equation1 | ||

|equation=<math> | |equation=<math> | ||

| − | \var{\hat{p}_{i,M} }= \displaystyle{\frac{1}{M} }\vars{\ | + | \var{\hat{p}_{i,M} }= \displaystyle{ \frac{1}{M} }\vars{\qphii}{\pcyiphii(y_i {{!}} \phi_i^{(m)};\theta)}. |

</math> }} | </math> }} | ||

| − | We could | + | We could consider ourselves satisfied with this estimator since we "only" have to select $M$ large enough to get an estimator with a small variance. Nevertheless, we will see now that it is possible to improve the statistical properties of this estimator. |

| − | Nevertheless, we will see now that it is possible to improve the statistical properties of this estimator. | ||

| − | For any distribution $\ | + | For any distribution $\tqphii$ that is absolutely continuous with respect to the marginal distribution $\qphii$, we can write |

{{Equation1 | {{Equation1 | ||

|equation=<math>\begin{eqnarray} | |equation=<math>\begin{eqnarray} | ||

\pyi(y_i;\theta) &=& | \pyi(y_i;\theta) &=& | ||

| − | \displaystyle{ \int \ | + | \displaystyle{ \int \pyiphii(y_i,\phi_i;\theta)\,d\phi_i }\\ |

| − | &=& \displaystyle{\int \ | + | &=& \displaystyle{\int \pcyiphii(y_i {{!}} \phi_i;\theta)\frac{\pphii(\phi_i;\theta)}{\tpphii(\phi_i;\theta)}\tpphii(\phi_i;\theta)\,d\phi_i } \\ |

| − | &=& \esps{\ | + | &=& \esps{\tqphii}{\pcyiphii(y_i {{!}} \phi_i;\theta)\displaystyle{\frac{\pphii(\phi_i;\theta)}{\tpphii(\phi_i;\theta)} } }. |

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | We can now approximate $\pyi(y_i;\theta)$ via an '' | + | We can now approximate $\pyi(y_i;\theta)$ via an ''importance sampling'' integration method using $\tqphii$ as a proposal distribution: |

<blockquote> | <blockquote> | ||

| − | 1. | + | 1. Draw $M$ independent values $\phi_i^{(1)}$, $\phi_i^{(2)}$, ..., $\phi_i^{(M)}$ from the proposal distribution $\tqphii(\, \cdot \, ; \theta)$. |

| − | + | </blockquote> | |

| − | 2. | + | <blockquote> |

| + | 2. Estimate $ \pyi(y_i;\theta)$ with | ||

| + | </blockquote> | ||

{{Equation1 | {{Equation1 | ||

|equation=<math> | |equation=<math> | ||

| − | \hat{p}_{i,M} = \displaystyle{ \frac{1}{M} }\sum_{m=1}^{M}\ | + | \hat{p}_{i,M} = \displaystyle{\frac{1}{M} }\sum_{m=1}^{M}\pcyiphii(y_i {{!}} \phi_i^{(m)};\theta) \displaystyle{\frac{\pphii(\phi_i^{(m)};\theta)}{\tpphii(\phi_i^{(m)};\theta)} }. |

</math> }} | </math> }} | ||

| − | |||

| − | By construction, this new estimator is also unbiased and its variance decreases | + | By construction, this new estimator is also unbiased and its variance also decreases as $1/M$: |

{{Equation1 | {{Equation1 | ||

|equation=<math> | |equation=<math> | ||

| − | \var{\hat{p}_{i,M} }= \displaystyle{\frac{1}{M} }\vars{\ | + | \var{\hat{p}_{i,M} }= \displaystyle{\frac{1}{M} }\vars{\tqphii}{\pcyiphii(y_i {{!}} \phi_i^{(m)};\theta) \displaystyle{\frac{\pphii(\phi_i^{(m)};\theta)}{\tpphii(\phi_i^{(m)};\theta)} } }. |

</math> }} | </math> }} | ||

| − | There | + | There exists an infinite number of possible proposal distributions $\tpphii$ which all provide the same rate of convergence $1/M$. |

| − | The trick is to reduce the variance of the estimator by selecting a proposal | + | The trick is to reduce the variance of the estimator by selecting a proposal distribution so that the numerator is as small as possible. |

| − | Imagine that we use the conditional distribution $\ | + | Imagine that we use the conditional distribution $\qcphiiyi$ as the proposal. Then, for any $m=1,2,\ldots,M$, |

{{Equation1 | {{Equation1 | ||

|equation=<math>\begin{eqnarray} | |equation=<math>\begin{eqnarray} | ||

| − | \ | + | \pcyiphii(y_i {{!}} \phi_i^{(m)};\theta) \displaystyle{\frac{\pphii(\phi_i^{(m)};\theta)}{\tpphii(\phi_i^{(m)};\theta)} } |

| − | &=& \ | + | &=& \pcyiphii(y_i {{!}} \phi_i^{(m)};\theta) \displaystyle{\frac{\pphii(\phi_i^{(m)};\theta)}{\pcphiiyi(\phi_i^{(m)} {{!}} y_i;\theta)} }\\ |

| − | &=& \pyi(y_i;\theta) | + | &=& \pyi(y_i;\theta), |

\end{eqnarray}</math> }} | \end{eqnarray}</math> }} | ||

| − | which means that $\hat{p}_{i,M}=\pyi(y_i;\theta)$! Such an estimator is optimal since its variance is null and only one realization of $\ | + | which means that $\hat{p}_{i,M}=\pyi(y_i;\theta)$! Such an estimator is optimal since its variance is null and only one realization of $\qcphiiyi$ is required to exactly compute $\pyi(y_i;\theta)$. The problem is that it is not possible to generate the $\phi_i^{(m)}$ with this conditional distribution, since that would require to compute a normalizing constant, which here is precisely $\pyi(y_i;\theta)$. |

| − | Nevertheless, this conditional distribution can be estimated using the Metropolis-Hastings algorithm described in [[The Metropolis-Hastings algorithm for simulating the individual parameters | + | Nevertheless, this conditional distribution can be estimated using the Metropolis-Hastings algorithm described in [[The Metropolis-Hastings algorithm for simulating the individual parameters]] section and a practical proposal "close" to the optimal proposal $\qcphiiyi$ can be derived. We can then expect to get a very accurate estimate with a relatively small Monte Carlo size $M$. |

| − | In $\monolix$, the mean and variance of the conditional distribution $\ | + | In $\monolix$, the mean and variance of the conditional distribution $\qcphiiyi$ are estimated by Metropolis-Hastings for each individual $i$. Then, the $\phi_i^{(m)}$ are drawn with a noncentral $t$ distribution: |

{{Equation1 | {{Equation1 | ||

| − | |equation=<math>\ | + | |equation=<math> |

| + | \phi_i^{(m)} = \mu_i + \sigma_i \times T_{i,m} , | ||

| + | </math> }} | ||

| − | where $\mu_i$ and $\sigma^2_i$ are estimates of $\esp{\ | + | where $\mu_i$ and $\sigma^2_i$ are estimates of $\esp{\phi_i|y_i;\theta}$ and $\var{\phi_i|y_i;\theta}$, and $(T_{i,m})$ is a sequence of i.i.d. random variables distributed with a Student's $t$ distribution with $\nu$ degrees of freedom. |

| − | + | $\monolix$ uses the default value $\nu=5$. It is also possible to automatically test different degrees of freedom from the set $\{2, 5, 10, 20\}$ and to select the one that provides the smallest empirical variance for $\widehat{ {\llike} }_M(\theta;\by) = \sum_{i=1}^{N}\log(\hat{p}_{i,M})$. | |

| − | select the one that provides the smallest empirical variance for $\widehat{ {\llike} }_M(\theta;\by) = \sum_{i=1}^{N}\log(\hat{p}_{i,M})$. | ||

{{Remarks | {{Remarks | ||

| − | |title= | + | |title=Remarks |

| − | |text= Even if $\widehat{\like}_M(\theta;\by) = \prod_{i=1}^{N}\hat{p}_{i,M}$ is an unbiased estimator of ${\like}(\theta;\by)$, | + | |text=Even if $\widehat{ {\like} }_M(\theta;\by) = \prod_{i=1}^{N}\hat{p}_{i,M}$ is an unbiased estimator of ${\like}(\theta;\by)$, $\widehat{ {\llike} }_M(\theta;\by)$ is a biased estimator of $\log({\like}(\theta;\by))$. Indeed, by Jensen's inequality, we have that |

| − | $\widehat{ {\llike} }_M(\theta;\by)$ is a biased estimator of $\log({\like}(\theta;\by))$. Indeed, by Jensen's inequality, we have that | ||

{{Equation1 | {{Equation1 | ||

| − | |equation=<math> \esp{\log(\widehat{ {\like} }_M(\theta;\by))} \leq \log \esp{\widehat{ {\like} }_M(\theta;\by)} = \log ({\like}(\theta;\by)). </math> }} | + | |equation=<math> |

| + | \esp{\log(\widehat{ {\like} }_M(\theta;\by))} \leq \log \esp{\widehat{ {\like} }_M(\theta;\by)} = \log ( {\like}(\theta;\by)). | ||

| + | </math> }} | ||

| − | + | However, the bias decreases as $M$ increases, and also if $\widehat{ {\like} }_M(\theta;\by)$ is close to ${\like}(\theta;\by)$. It is therefore highly recommended to use a proposal as close as possible to the conditional distribution $\qcphiiyi$, which means having to estimate this conditional distribution before estimating the log-likelihood. | |

}} | }} | ||

| Line 380: | Line 132: | ||

{{Example | {{Example | ||

|title=Example | |title=Example | ||

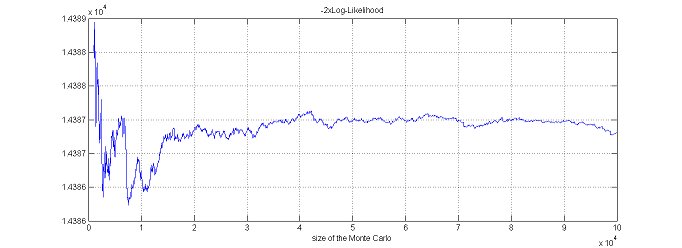

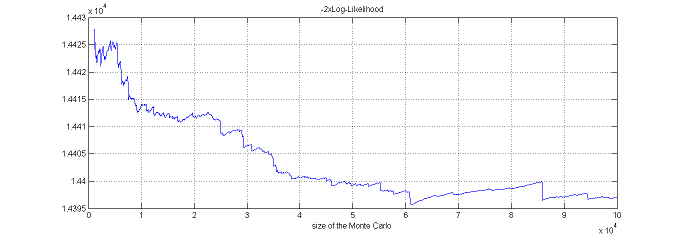

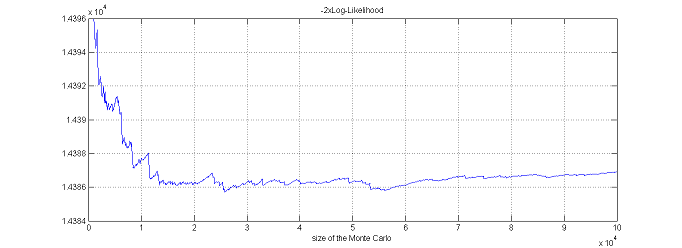

| − | |text= | + | |text= A mixture model is used for this example. The goal here is not to provide details about the model and the formulas used for implementation. We only want to highlight the importance of the choice of the proposal distribution for estimating the likelihood in complex models. The three figures below display estimates of the deviance ($-2\times {\llike} (\theta;\by)$) by importance sampling using different proposal distributions. The estimated deviance is displayed as a function of the Monte Carlo size. |

| − | + | ||

| + | In the first example, the conditional distributions of the individual parameters $\phi_i$ were estimated using the [[The Metropolis-Hastings algorithm for simulating the individual parameters|Metropolis-Hastings algorithm]], and noncentral $t$ distributions with 5 d.f. were used as proposal distributions. There is no bias and an accurate estimate is obtained with a small Monte Carlo size. The estimated deviance is 14386.8 (s.e. = 0.7). Here, only 100 Metropolis-Hastings iterations were required to correctly estimate the conditional mean and variance of the $\psi_i$. | ||

| + | |||

| + | |||

| + | {{ImageWithCaption|image=ll2.png|caption= }} | ||

| + | |||

| + | In the second example, only the last 30 iterations of [[The SAEM algorithm for estimating population parameters|SAEM]] were used for estimating the conditional mean and variance of the $\phi_i$. Then, noncentral $t$ distributions with 5 d.f. we used as proposal distributions. Now there is bias which decreases very slowly with the Monte Carlo size and which is non-negligible even with $10^5$ simulations. The estimated deviance is 14397.0 (s.e. = 2.7). | ||

| − | |||

| + | {{ImageWithCaption|image=ll1.png|caption= }} | ||

| − | + | ||

| + | In the third example, normal distributions were used as proposals. The parameters of these distributions were the mean and variance of the conditional distributions of the $\phi_i$ estimated using Metropolis-Hastings. Results are similar to those obtained with a $t$ distribution (estimated deviance=14386.9, s.e. = 1) but here, more simulations are required to eliminate the bias. | ||

| + | |||

| + | |||

| + | {{ImageWithCaption|image=ll3.png|caption= }} | ||

}} | }} | ||

<br> | <br> | ||

| − | |||

| + | == Estimation using linearization == | ||

| + | |||

| + | For continuous data models, an alternative to the importance sampling approach is to use a linearization of the model like that proposed in the [[Estimation of the observed Fisher information matrix#Estimation using linearization of the model|Estimation of the F.I.M. using a linearization of the model]] chapter to approximate the observed Fisher Information Matrix. Indeed, the marginal distribution of a continuous vector of observations $y_i$ can be approximated by a normal distribution. It is then straightforward to derive the associated likelihood. All of these calculations are described in the [[Estimation of the observed Fisher information matrix#Estimation using linearization of the model|Estimation of the F.I.M. using a linearization of the model]] chapter. | ||

| − | + | This method can be much faster than importance sampling. It should be used by modelers for model selection purposes during the initial runs, when the goal is to identify significant differences between models. Importance sampling should be used when a more precise evaluation of the log-likelihood is required. | |

| − | |||

| − | + | {{Back | |

| + | |link=Estimation of the observed Fisher information matrix }} | ||

Latest revision as of 15:43, 3 June 2013

Estimation using importance sampling

The observed log-likelihood ${\llike}(\theta;\by)=\log({\like}(\theta;\by))$ can be estimated without requiring approximation of the model, using a Monte Carlo approach.

Since

we can estimate $\log(\pyi(y_i;\theta))$ for each individual and derive an estimate of the log-likelihood as the sum of these individual log-likelihoods. We will now explain how to estimate $\log(\pyi(y_i;\theta))$ for any individual $i$.

Using the $\phi$-representation of the model, notice first that $\pyi(y_i;\theta)$ can be decomposed as follows:

Thus, $\pyi(y_i;\theta)$ is expressed as a mean. It can therefore be approximated by an empirical mean using a Monte Carlo procedure:

1. Draw $M$ independent values $\phi_i^{(1)}$, $\phi_i^{(2)}$, ..., $\phi_i^{(M)}$ from the normal distribution $\qphii(\, \cdot \, ; \theta)$.

2. Estimate $ \pyi(y_i;\theta)$ with

By construction, this estimator is unbiased:

Furthermore, it is consistent since its variance decreases as $1/M$:

We could consider ourselves satisfied with this estimator since we "only" have to select $M$ large enough to get an estimator with a small variance. Nevertheless, we will see now that it is possible to improve the statistical properties of this estimator.

For any distribution $\tqphii$ that is absolutely continuous with respect to the marginal distribution $\qphii$, we can write

We can now approximate $\pyi(y_i;\theta)$ via an importance sampling integration method using $\tqphii$ as a proposal distribution:

1. Draw $M$ independent values $\phi_i^{(1)}$, $\phi_i^{(2)}$, ..., $\phi_i^{(M)}$ from the proposal distribution $\tqphii(\, \cdot \, ; \theta)$.

2. Estimate $ \pyi(y_i;\theta)$ with

By construction, this new estimator is also unbiased and its variance also decreases as $1/M$:

There exists an infinite number of possible proposal distributions $\tpphii$ which all provide the same rate of convergence $1/M$. The trick is to reduce the variance of the estimator by selecting a proposal distribution so that the numerator is as small as possible.

Imagine that we use the conditional distribution $\qcphiiyi$ as the proposal. Then, for any $m=1,2,\ldots,M$,

which means that $\hat{p}_{i,M}=\pyi(y_i;\theta)$! Such an estimator is optimal since its variance is null and only one realization of $\qcphiiyi$ is required to exactly compute $\pyi(y_i;\theta)$. The problem is that it is not possible to generate the $\phi_i^{(m)}$ with this conditional distribution, since that would require to compute a normalizing constant, which here is precisely $\pyi(y_i;\theta)$.

Nevertheless, this conditional distribution can be estimated using the Metropolis-Hastings algorithm described in The Metropolis-Hastings algorithm for simulating the individual parameters section and a practical proposal "close" to the optimal proposal $\qcphiiyi$ can be derived. We can then expect to get a very accurate estimate with a relatively small Monte Carlo size $M$.

In $\monolix$, the mean and variance of the conditional distribution $\qcphiiyi$ are estimated by Metropolis-Hastings for each individual $i$. Then, the $\phi_i^{(m)}$ are drawn with a noncentral $t$ distribution:

where $\mu_i$ and $\sigma^2_i$ are estimates of $\esp{\phi_i|y_i;\theta}$ and $\var{\phi_i|y_i;\theta}$, and $(T_{i,m})$ is a sequence of i.i.d. random variables distributed with a Student's $t$ distribution with $\nu$ degrees of freedom.

$\monolix$ uses the default value $\nu=5$. It is also possible to automatically test different degrees of freedom from the set $\{2, 5, 10, 20\}$ and to select the one that provides the smallest empirical variance for $\widehat{ {\llike} }_M(\theta;\by) = \sum_{i=1}^{N}\log(\hat{p}_{i,M})$.

Estimation using linearization

For continuous data models, an alternative to the importance sampling approach is to use a linearization of the model like that proposed in the Estimation of the F.I.M. using a linearization of the model chapter to approximate the observed Fisher Information Matrix. Indeed, the marginal distribution of a continuous vector of observations $y_i$ can be approximated by a normal distribution. It is then straightforward to derive the associated likelihood. All of these calculations are described in the Estimation of the F.I.M. using a linearization of the model chapter.

This method can be much faster than importance sampling. It should be used by modelers for model selection purposes during the initial runs, when the goal is to identify significant differences between models. Importance sampling should be used when a more precise evaluation of the log-likelihood is required.